AI-generated content is everywhere: in classrooms, in marketing decks, on blog posts that are supposed to be original. And as AI writing tools get more advanced, the stakes rise with them: Will this content rank? Does it sound too robotic to engage readers?

That’s where generative AI detection tools come in. Editors use them to vet writers. Agencies use them to keep their deliverables clean. The problem? These tools aren’t always right.

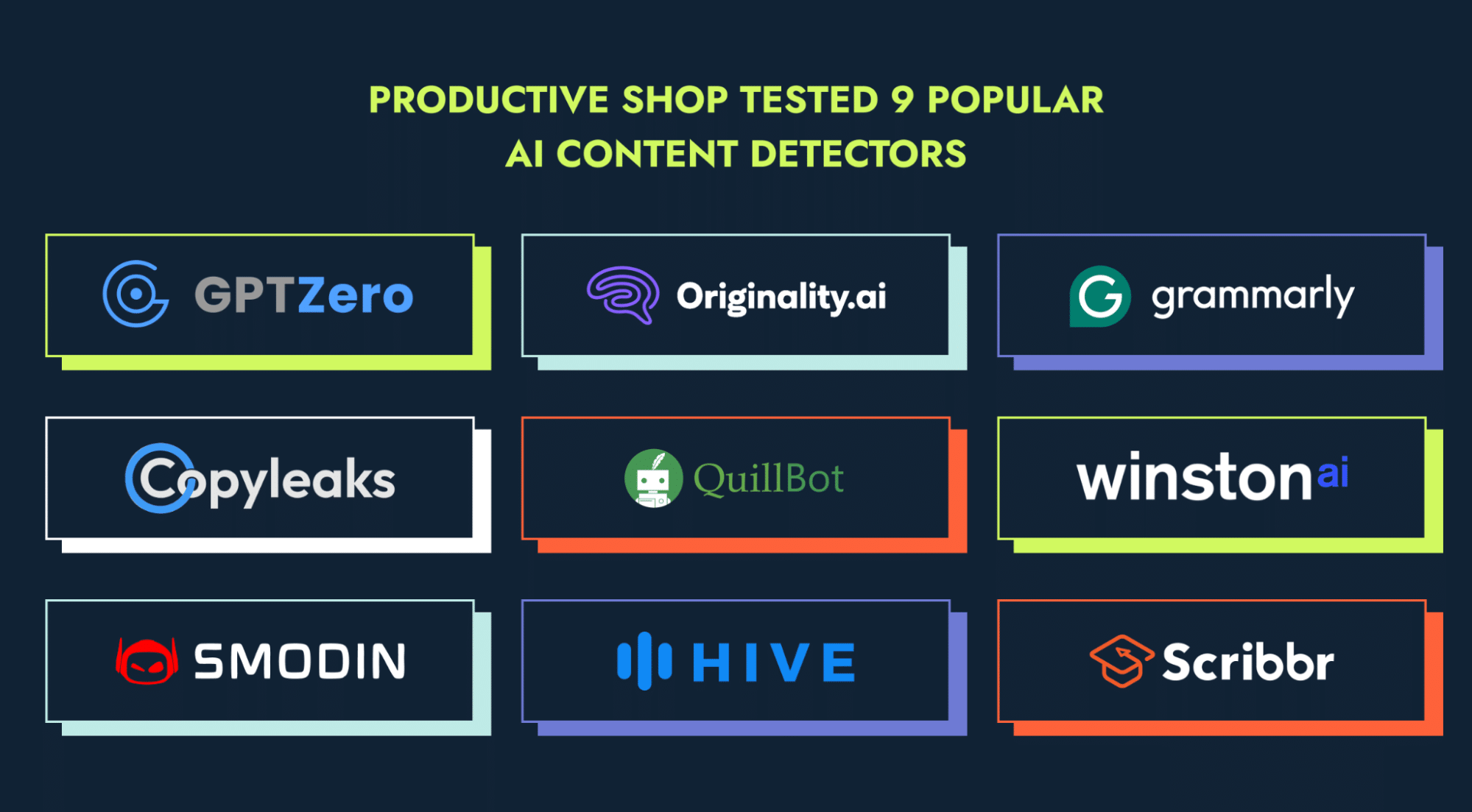

So, we set out to test nine popular AI detection tools on the market.

Key highlights:

- AI detection tools aren’t perfect, and shouldn’t be treated as absolute proof. But they’re useful for identifying content that feels robotic, repetitive or overly AI-assisted.

- Basic human writing often gets flagged as AI content. Tools like Winston, Copyleaks, Hive and Originality.ai flag overly simple text as AI-written content.

- Expert-level human writing consistently passes AI detectors with high confidence, confirming that depth and nuance still stand out.

- Human-edited AI content is the gray zone. A few minor tweaks are often enough to fool many AI text detectors. Tools like Quillbot and Smodin are more forgiving (scoring 35–54% AI), while stricter ones like Originality.ai still flagged nearly 100%.

- Quillbot is the most balanced AI content detector tool overall. On the other hand, Originality.ai is the most sensitive to AI-like patterns.

Why we decided to look for the most accurate AI detectors

As an SEO growth agency, we test content for detectable AI patterns all the time.

The reason: content that sounds AI-written doesn’t perform. It might rank temporarily, but it won’t build trust, convert readers or stand up to future algorithm updates. For the technology startups we serve, that’s a risk we can’t ignore.

Clients often ask us, “What’s the ‘most accurate’ AI detector?” So, this experiment finds out how these tools think, where they fall short and when you can trust them.

For editors, marketers and SEO teams, AI patterns matter, not just for detection but also for audience trust and search performance. If your content feels like AI, people will bounce. Search engines will, too.

We weren’t just looking to see which tool gave us the right answer. We wanted to know:

- Can AI content detectors consistently tell human-written text apart from AI-generated content?

- Are these tools sophisticated enough to detect how something is written, and not just what is written?

- And what happens when a human writes in a formulaic or robotic tone? Or when an AI-generated draft is lightly edited by a human?

- Do you really need to pay for AI content detection software? Is it worth the price? And if so, which tool do you need?

In short: can these AI detectors actually spot creativity, nuance and intent? Or are they just sniffing out surface-level patterns?

How we conducted the experiment to find the best AI writing detector

We kept the test realistic. Here’s what we did:

- Used only the free versions of each tool (because that’s what most people use)

- Created four intros on the same topic, written by humans, AI and a mix of both

- Ran each version through nine popular AI detection tools

- Compared results to see who got it right (and who didn’t)

Some tools flagged human writing as AI-generated. Others let AI-written text slide through. Not one tool was flawless.

Content we tested with the AI detection tools

We created four versions of a text based on a single brief (we wanted to mirror how it works in real life, where a writer is given a content brief). We used only the introductions for the test, as many free AI content detector tools have a word limit.

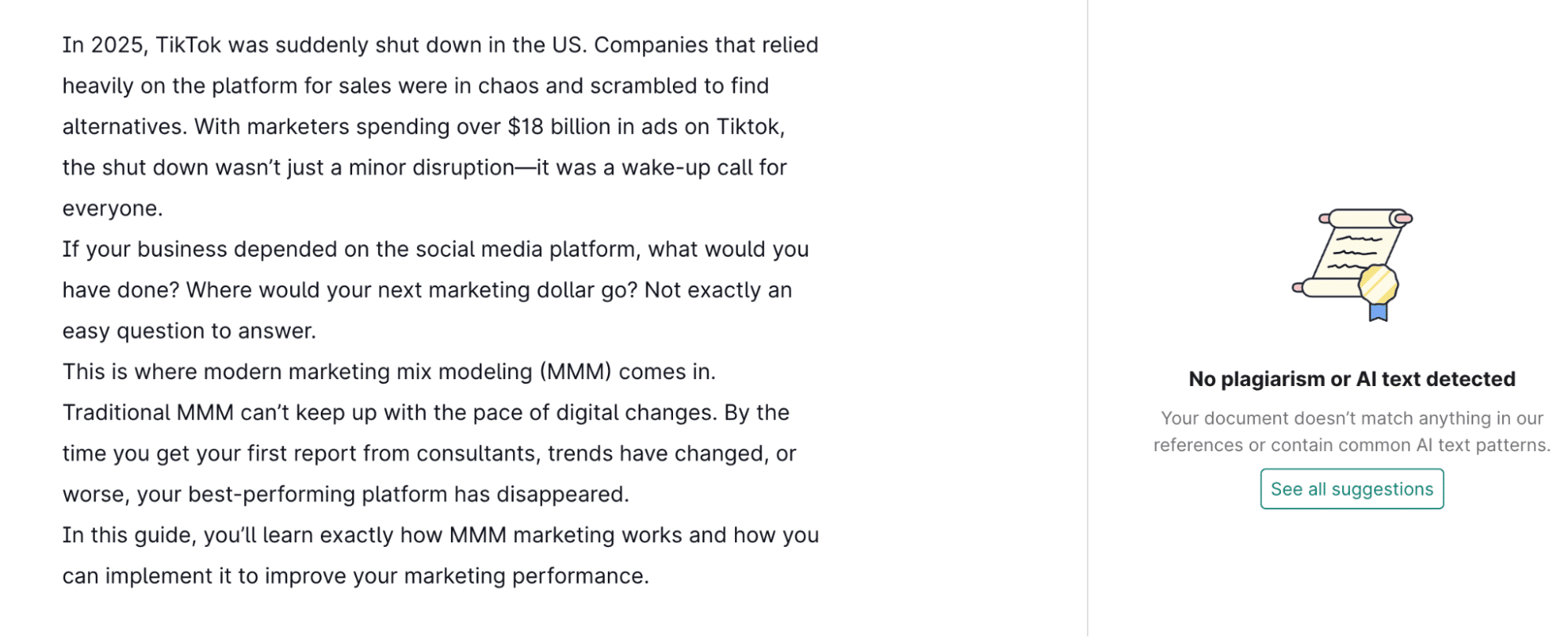

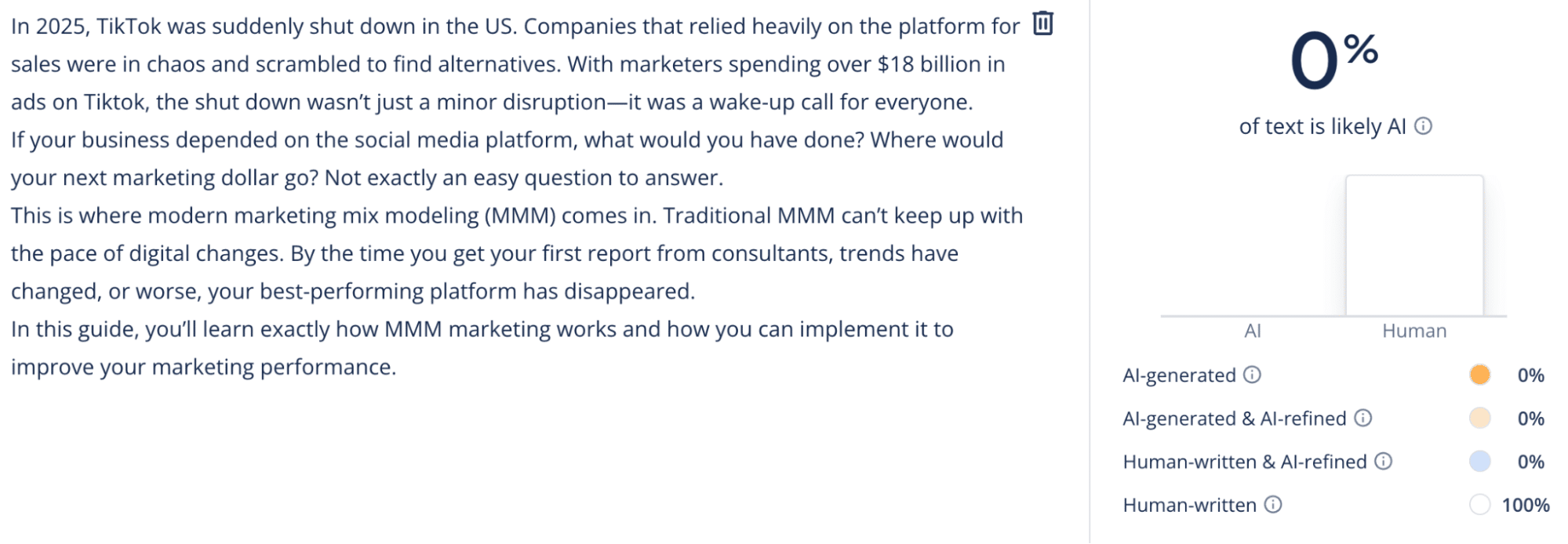

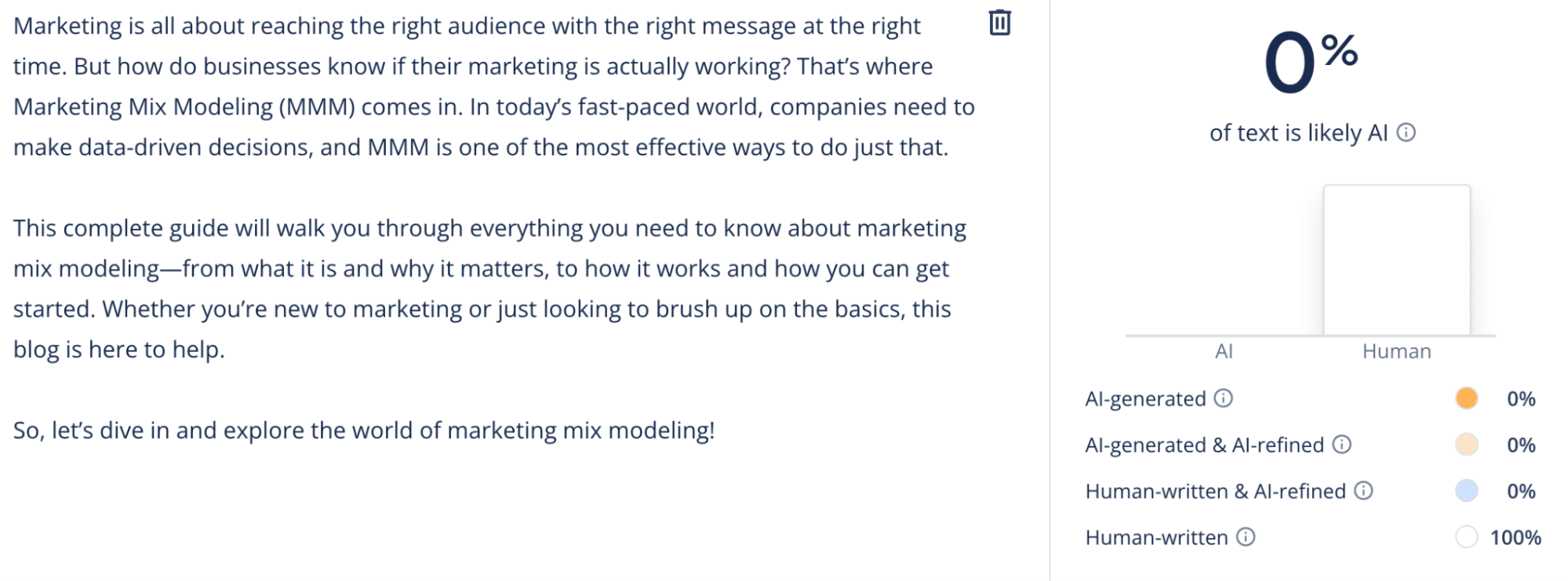

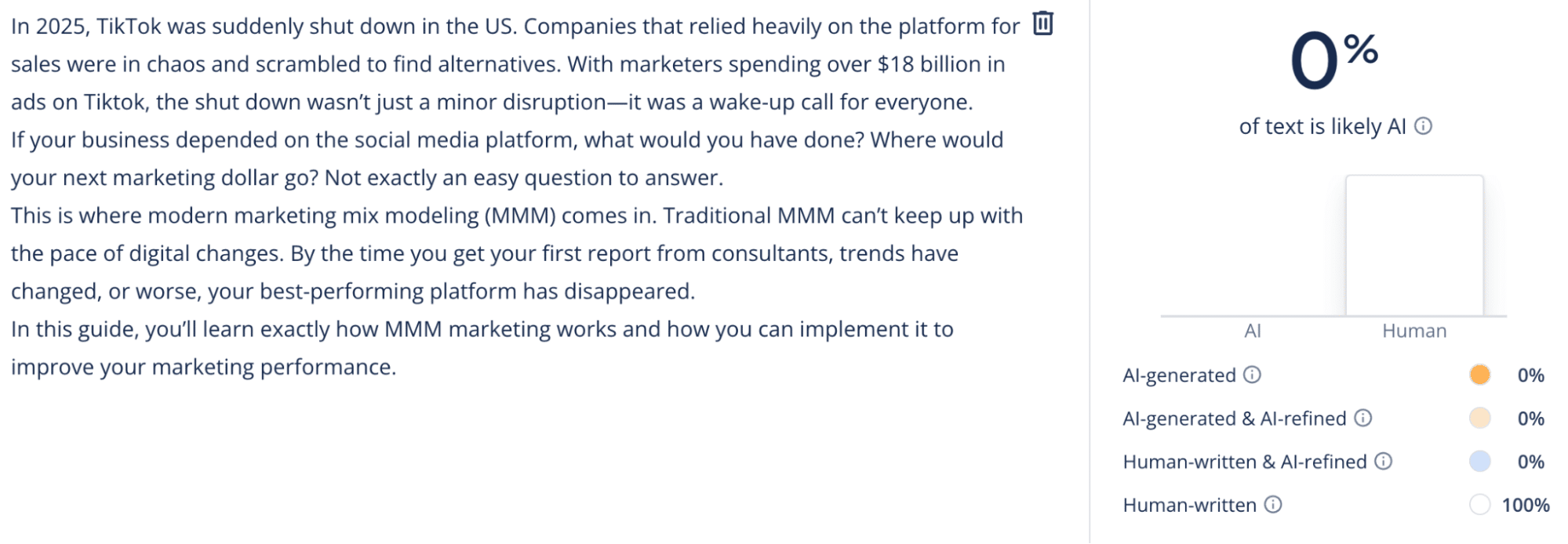

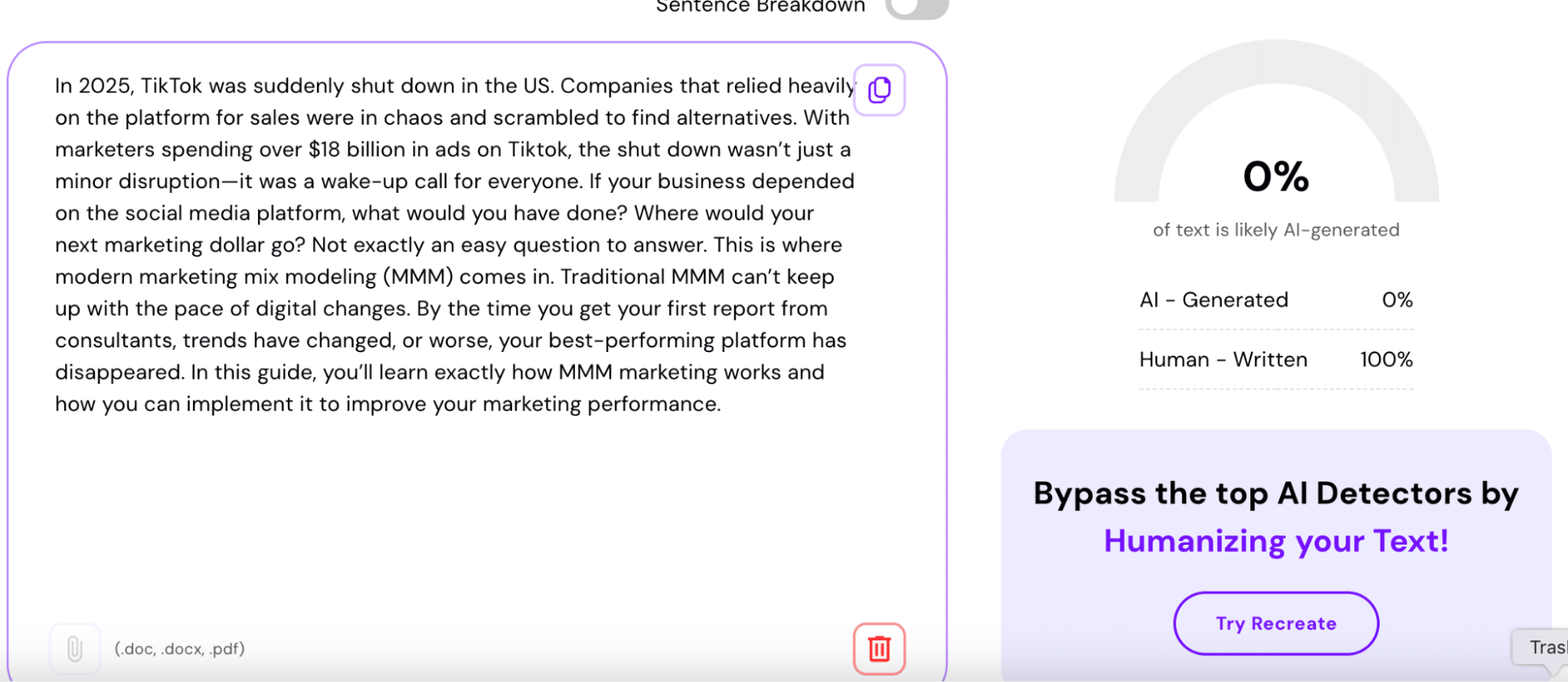

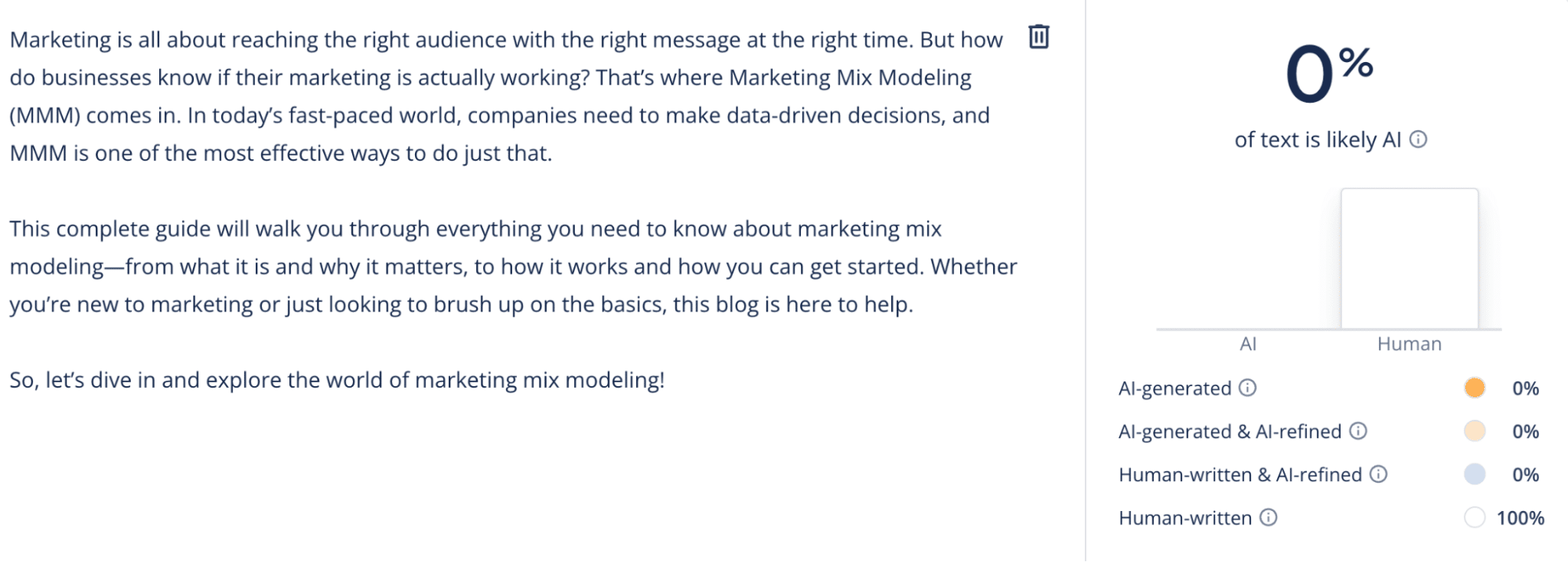

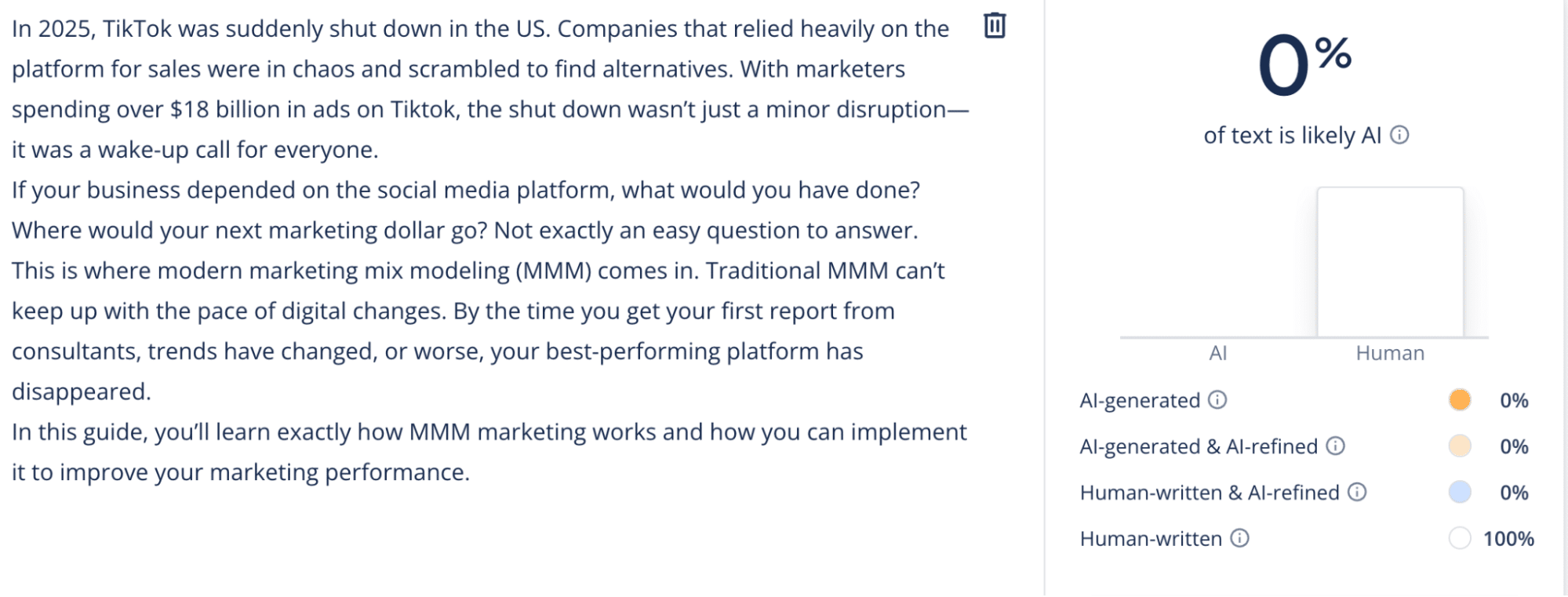

1. Basic human-written content

This text in the image below was written by a human without AI assistance, but it’s not polished or particularly insightful. It contains:

- Simple sentence structures

- Some repetitive phrasing

- No deep analysis, just a straightforward explanation of the topic

Why test basic content?

AI detectors claim to identify “robotic” text patterns. But what happens when a human writes in a basic, formulaic style? Will detectors mistakenly classify it as AI-generated?

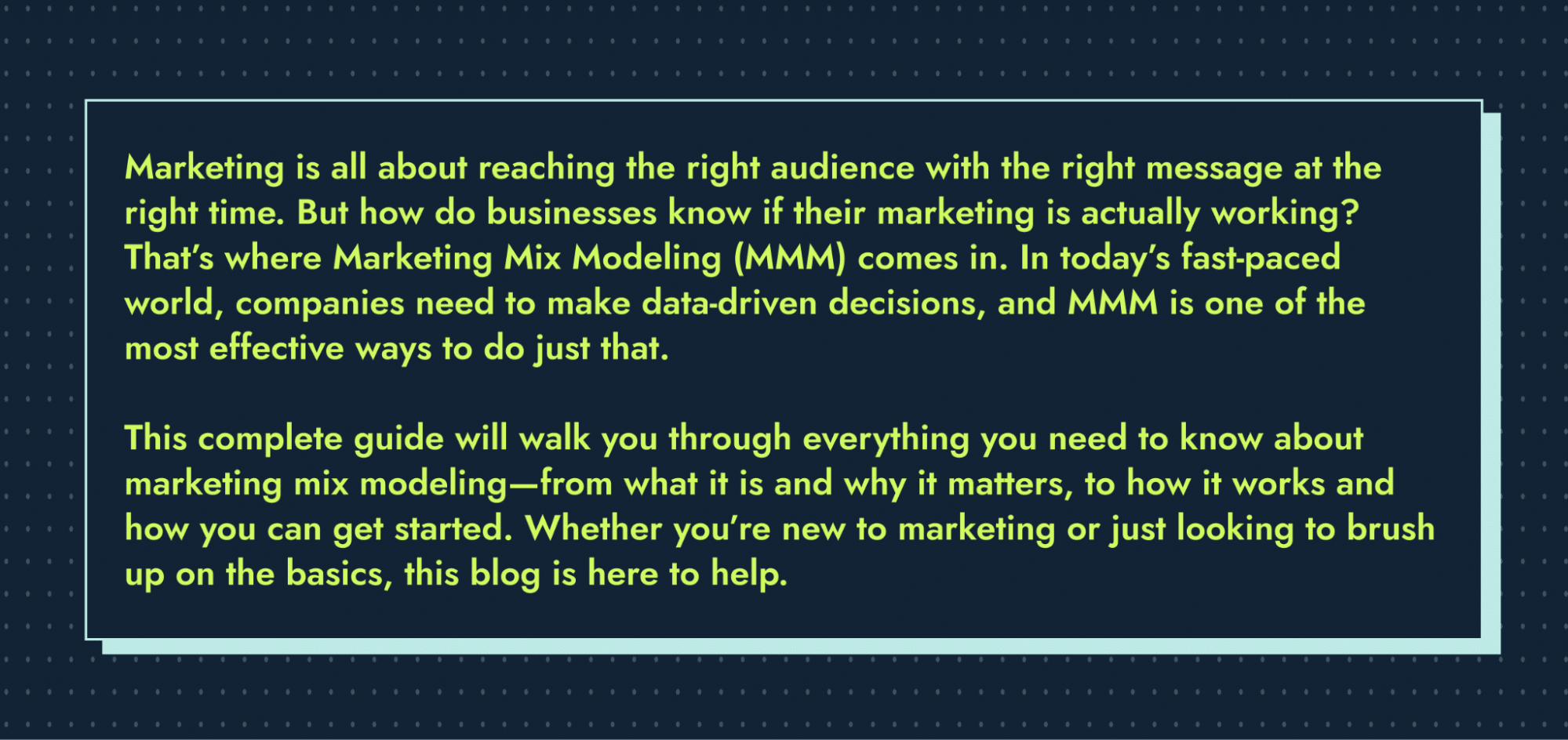

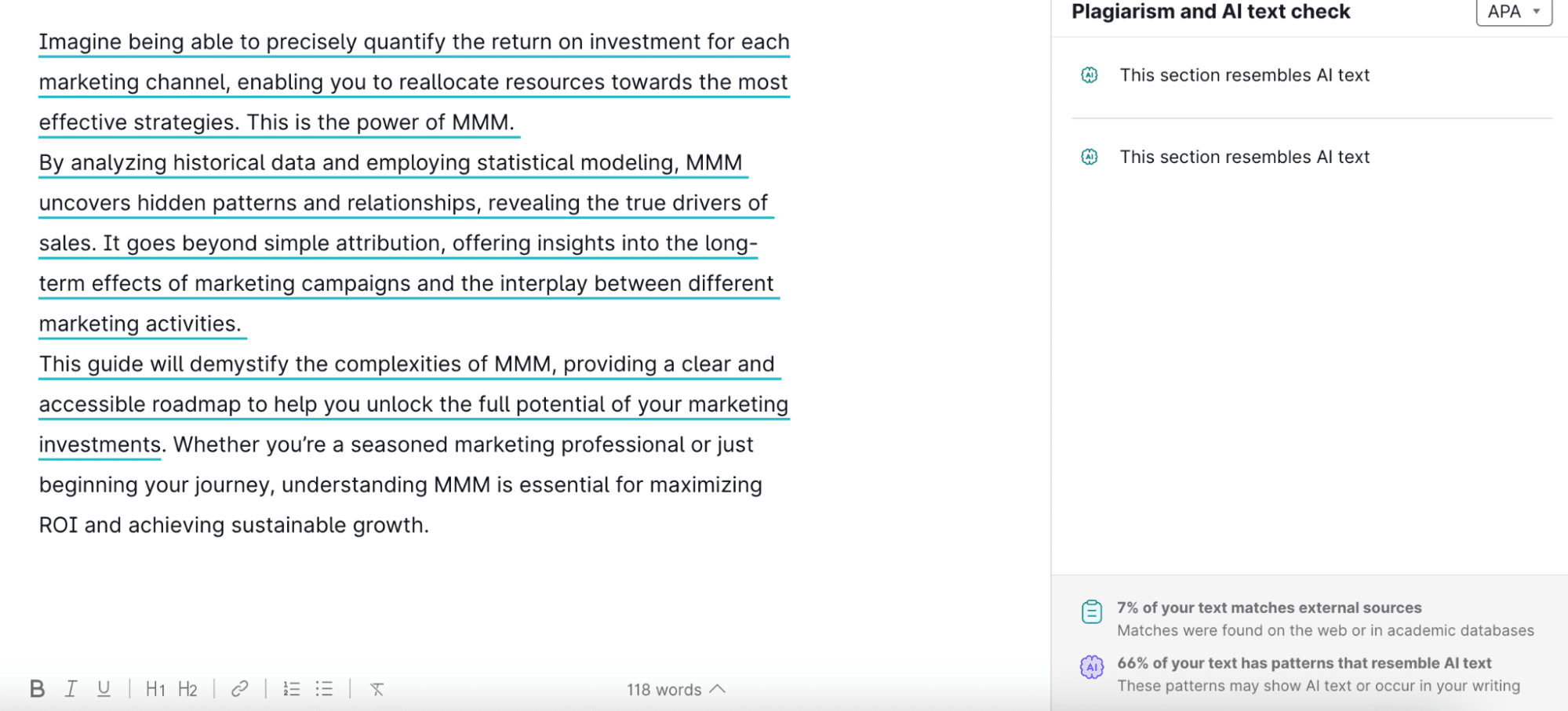

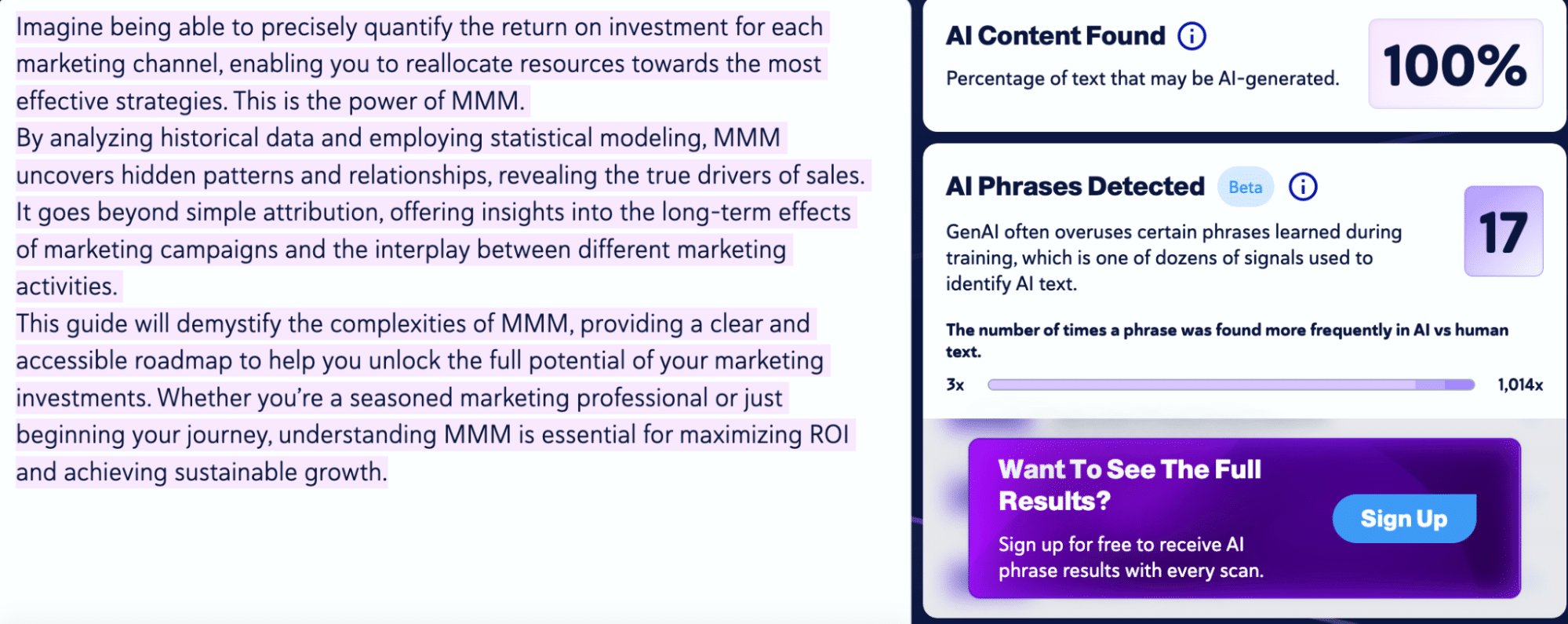

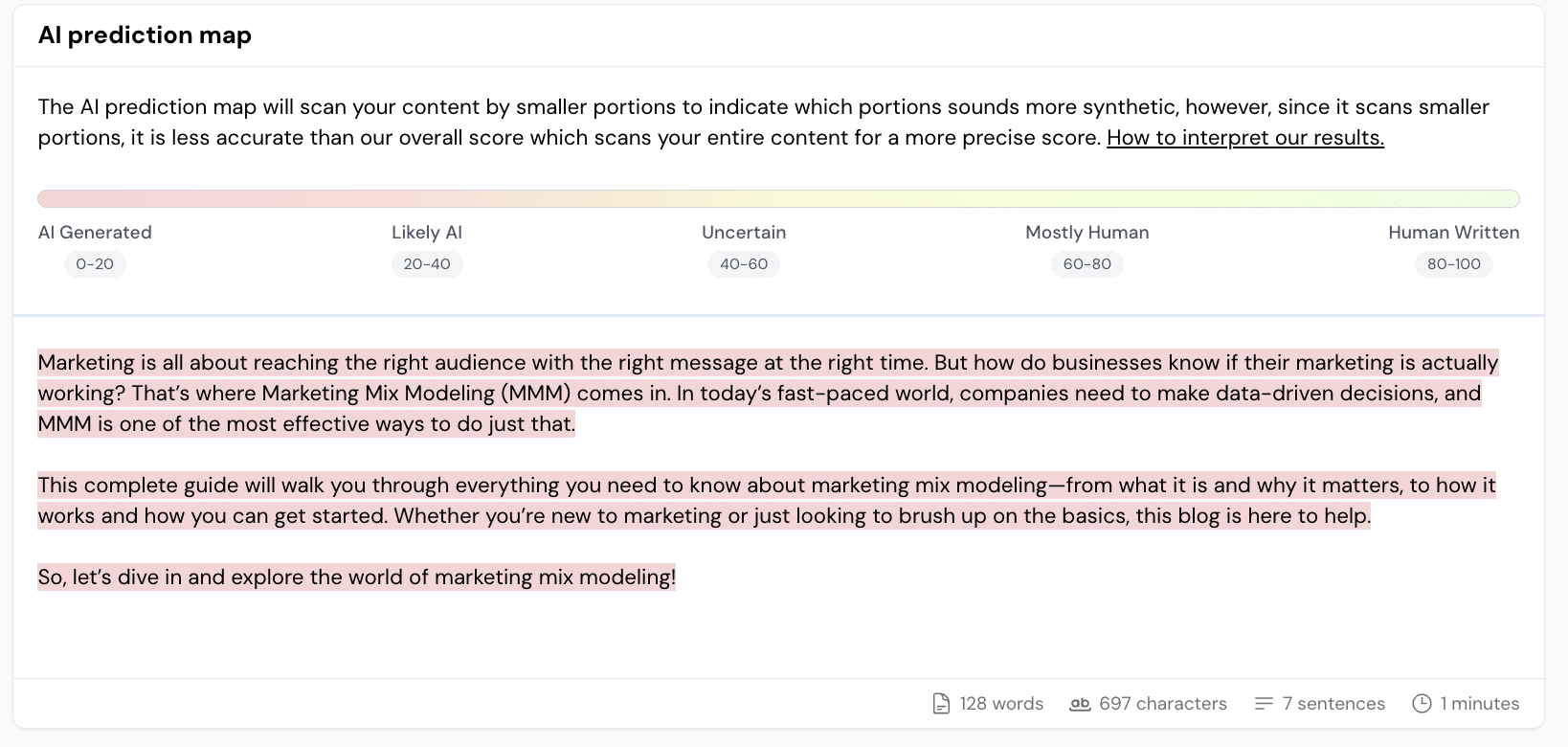

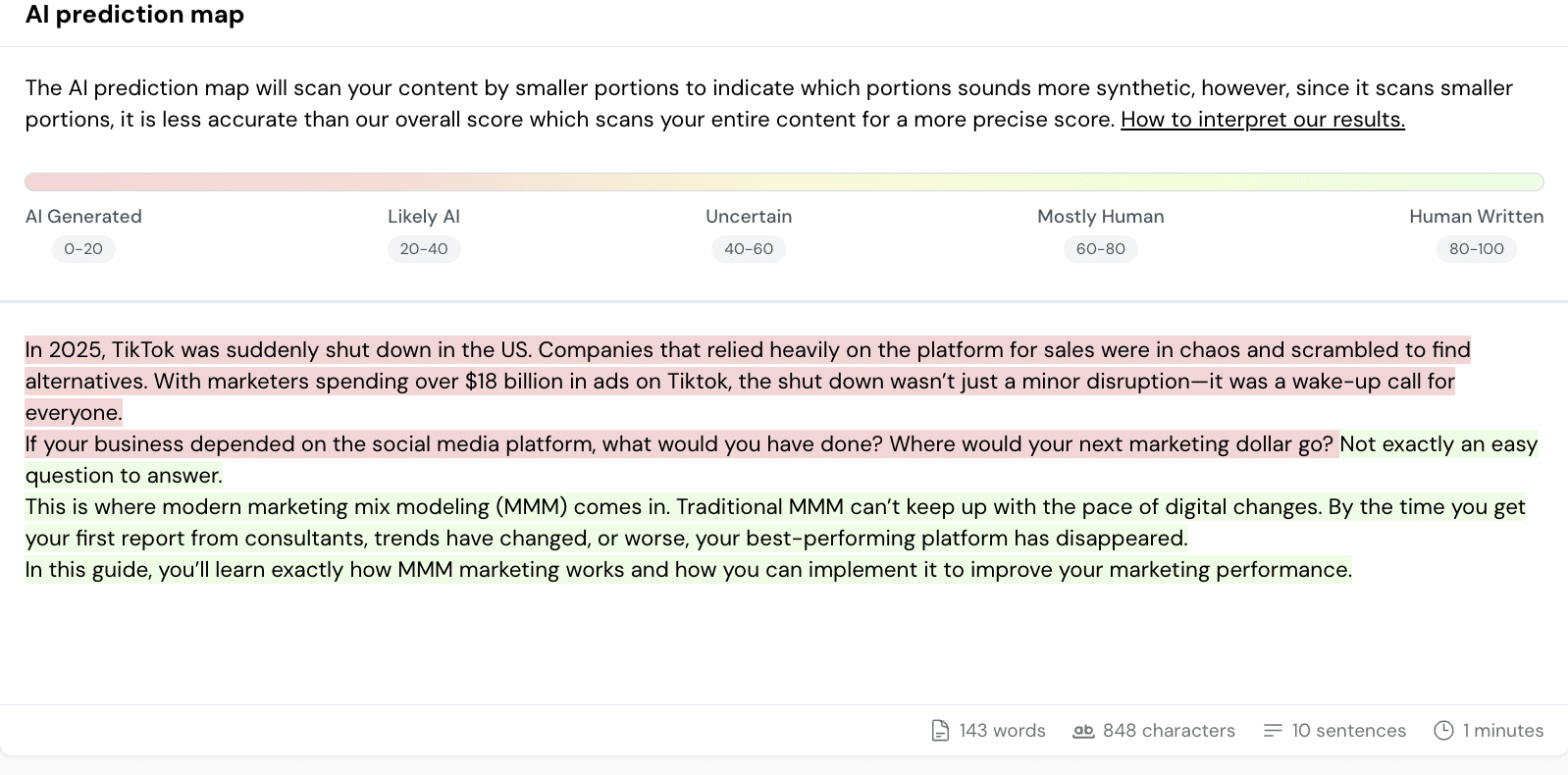

2. Expert-level human writing

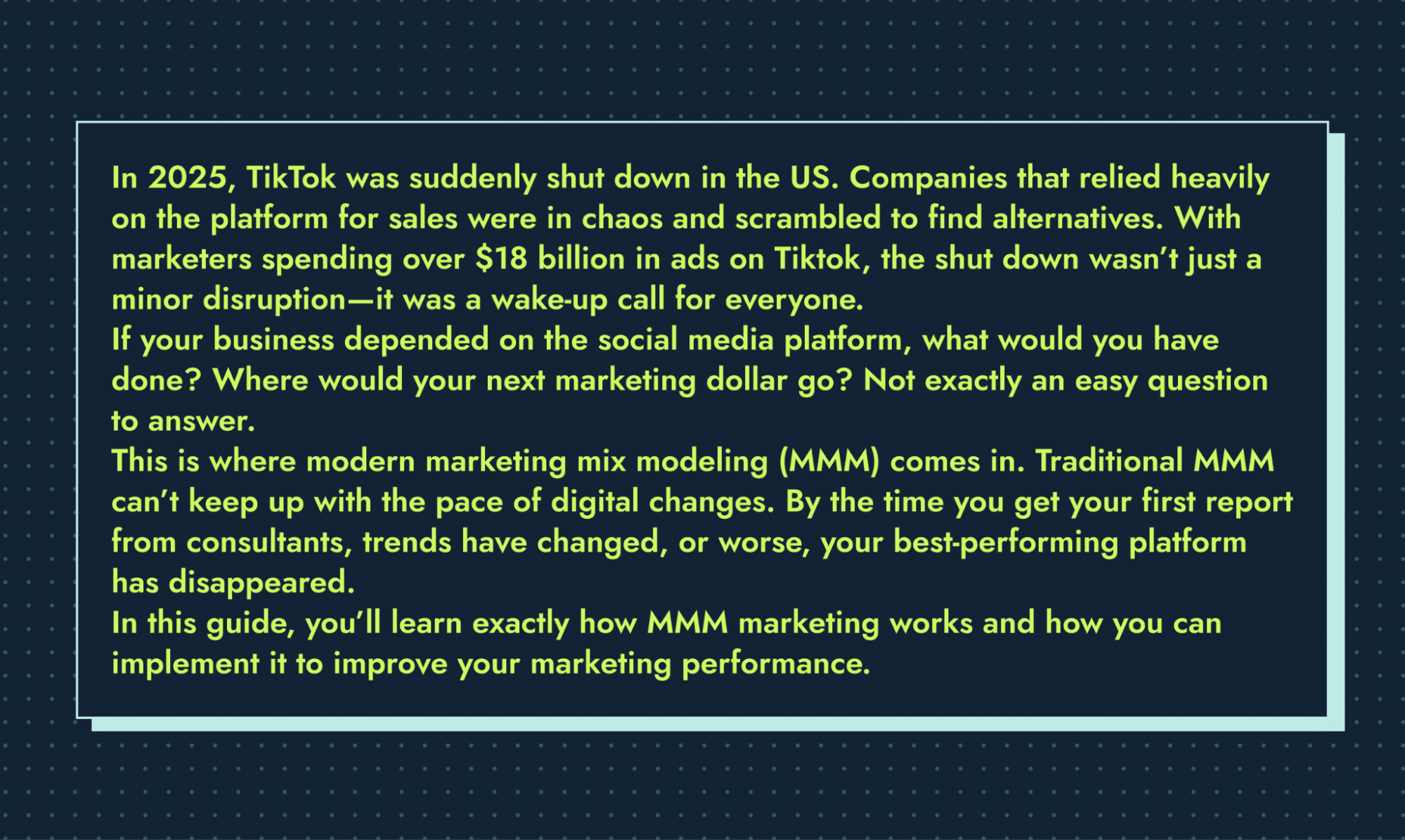

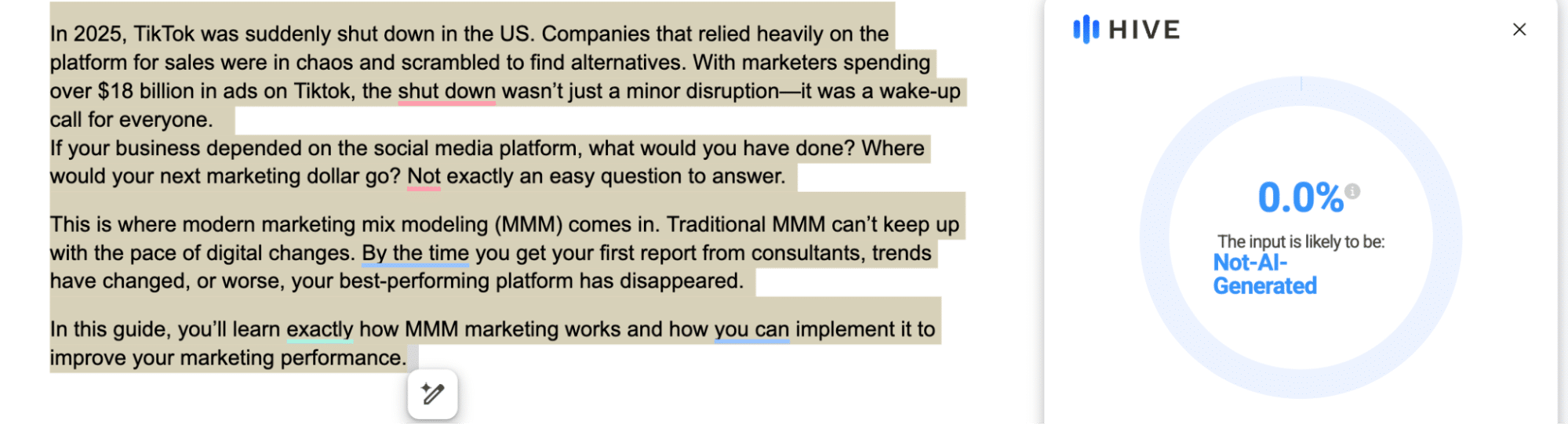

We used the text we had written for a client on marketing mix modeling:

This sample text is fully human-written, but with greater depth, originality and connections to broader concepts. It goes beyond just explaining a topic. It integrates:

- Cross-disciplinary thinking (example: relating AI marketing to psychology, art or historical events)

- Real-world correlations (connecting marketing trends to the latest Super Bowl ad or a cultural shift)

- A distinct human voice (insights, humor, personal experiences)

Why test expert content?

- AI struggles with true creativity and interdisciplinary analysis. While it can generate well-structured text, it often lacks original thought and nuanced connections.

- Testing expert-level human writing helps us see whether detectors can recognize depth and originality, or if they still flag it based on surface-level patterns.

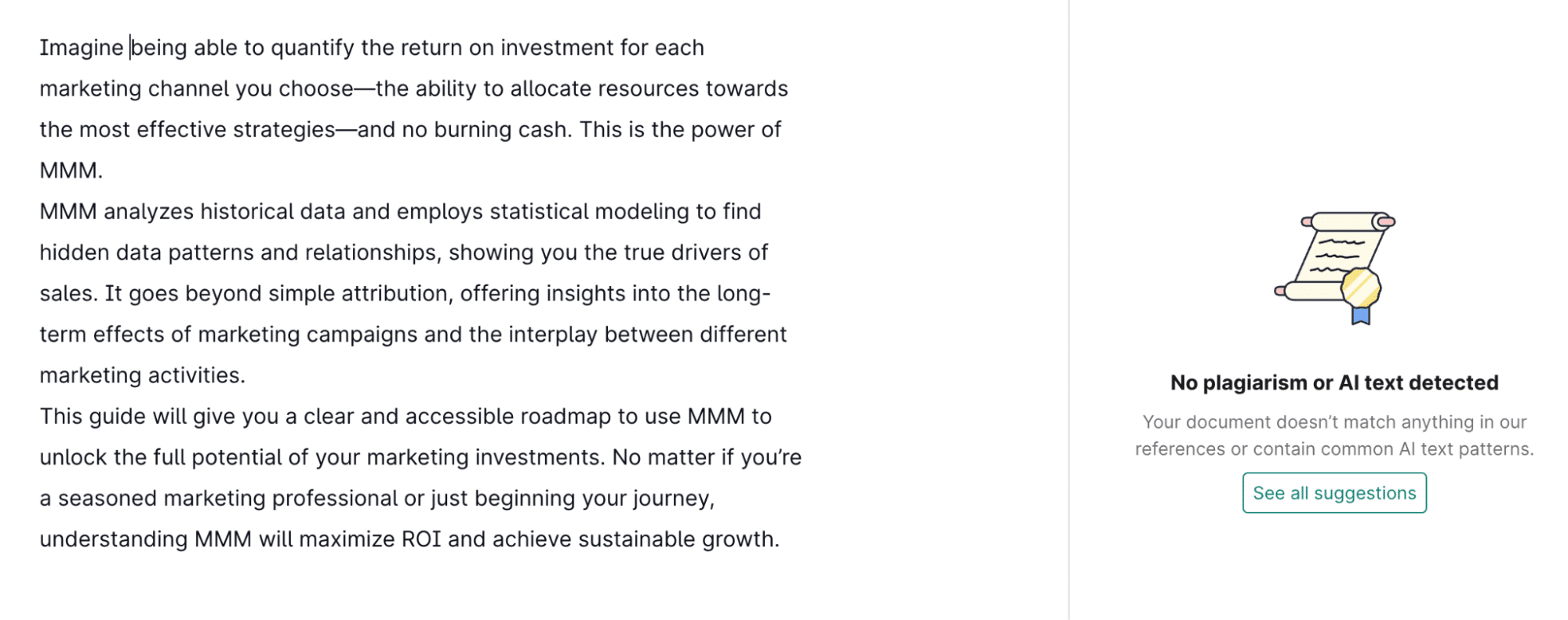

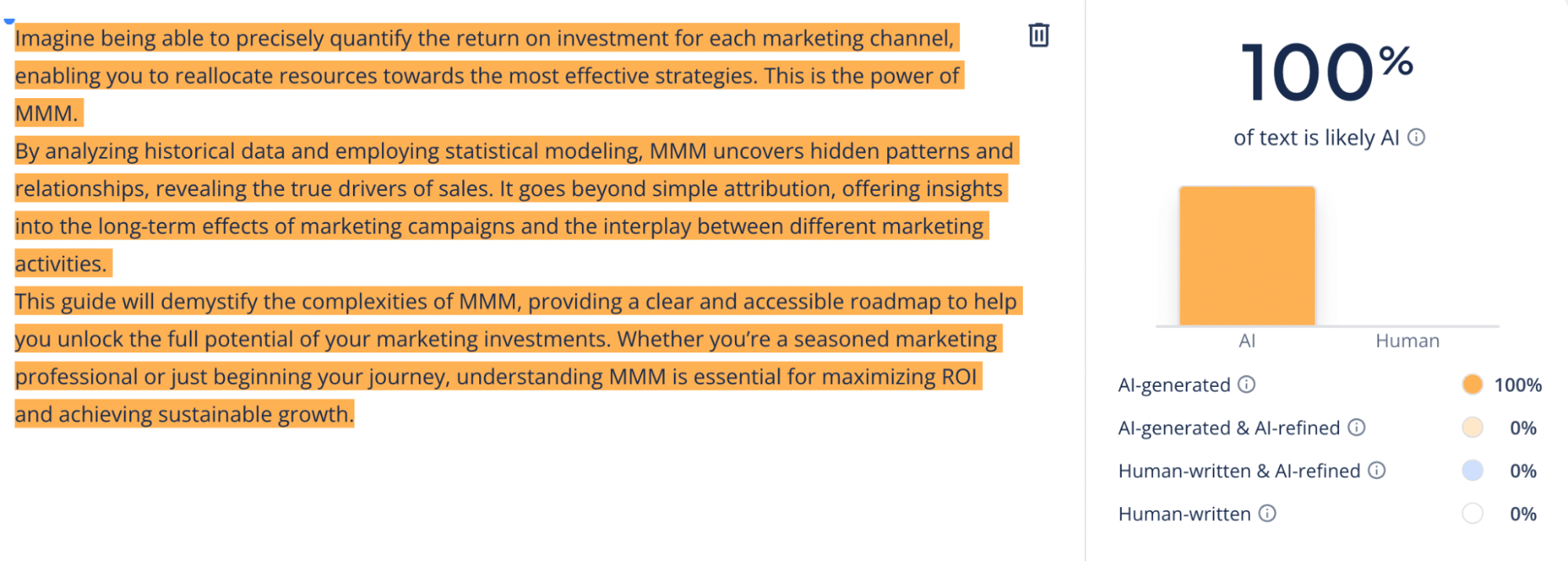

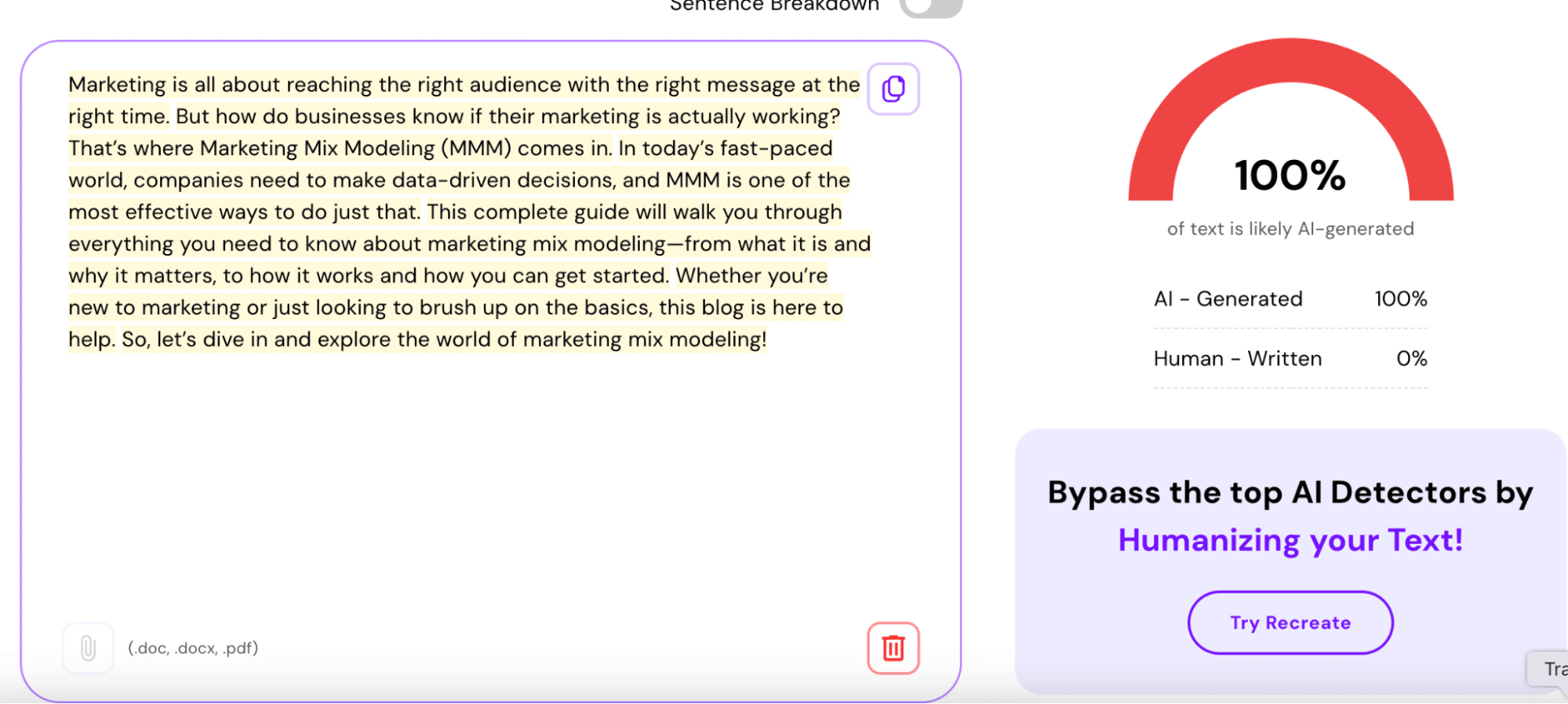

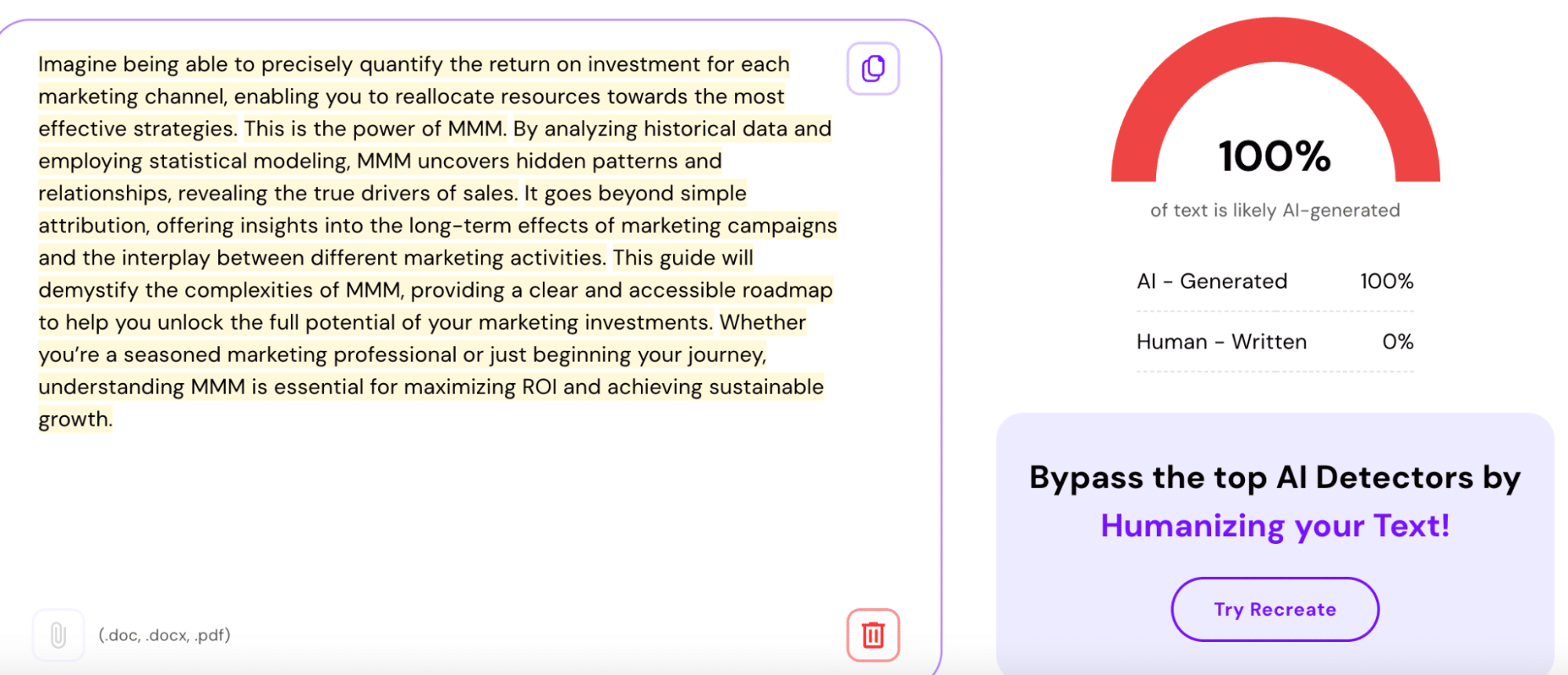

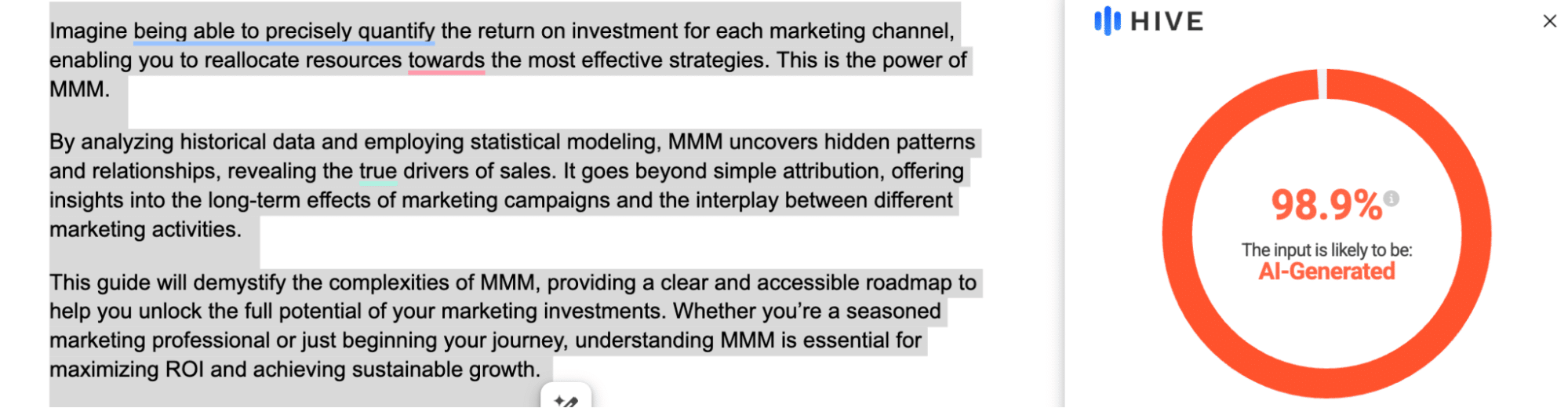

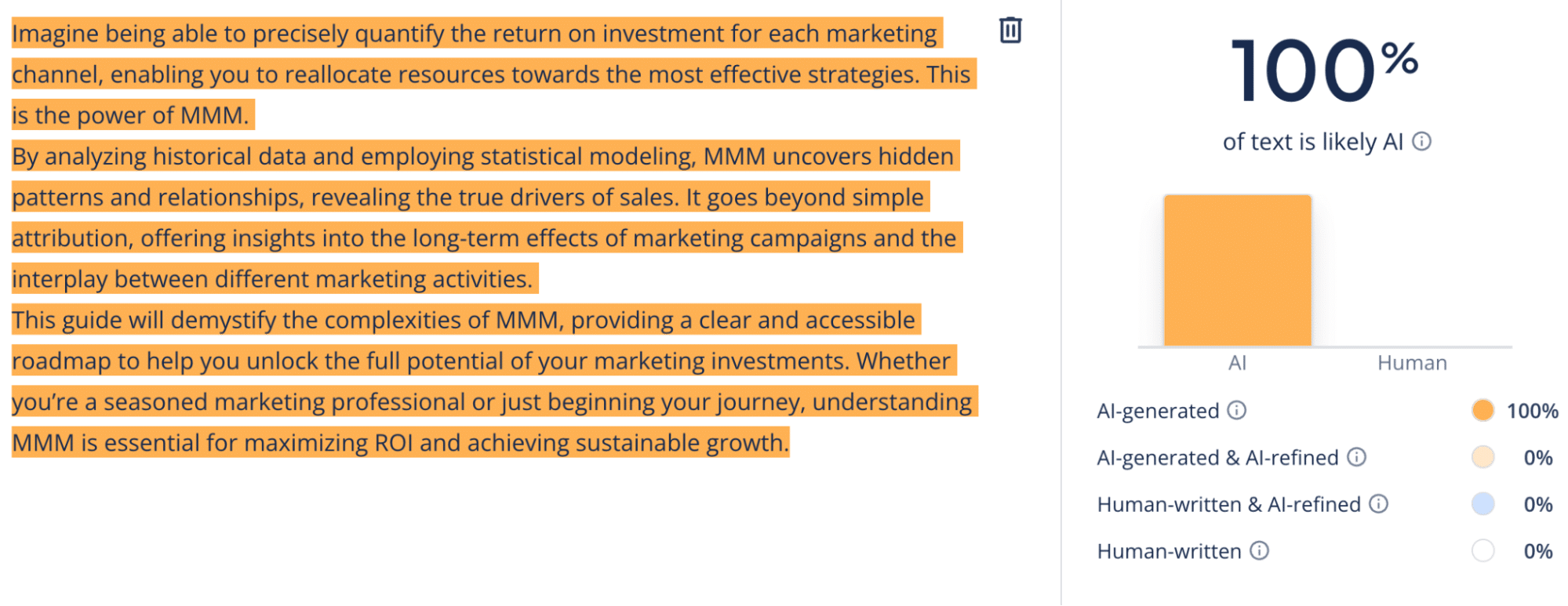

3. Fully AI-generated content

To give AI a fair shot, we generated versions of the text using multiple AI tools to see how they differ in style (using the same brief):

- ChatGPT (OpenAI)

- Gemini (Google)

- Claude (Anthropic)

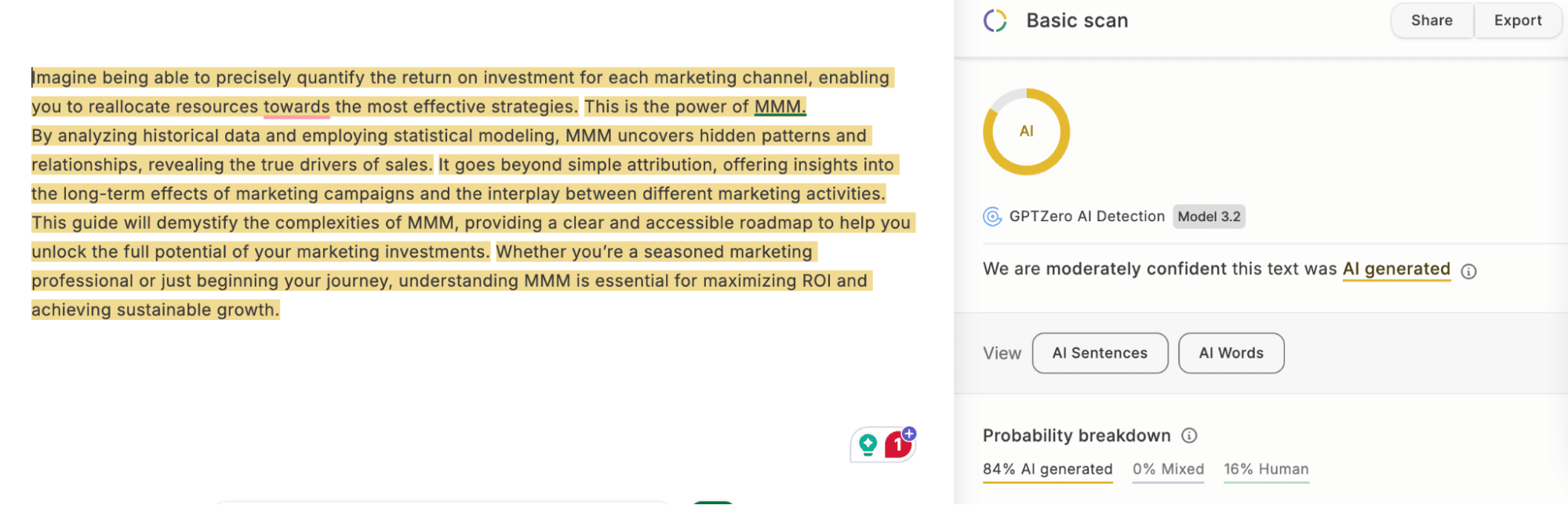

We chose the content below, created by ChatGPT 4.0, as it read the most human-like.

Why test AI-generated content?

- Fully AI-written text is the baseline. If a tool can’t catch this, it’s not useful.

- Testing helps us see how reliably detectors identify content created entirely by large language models.

- It also reveals which tools are sensitive to common AI writing patterns like structure, predictability and phrasing.

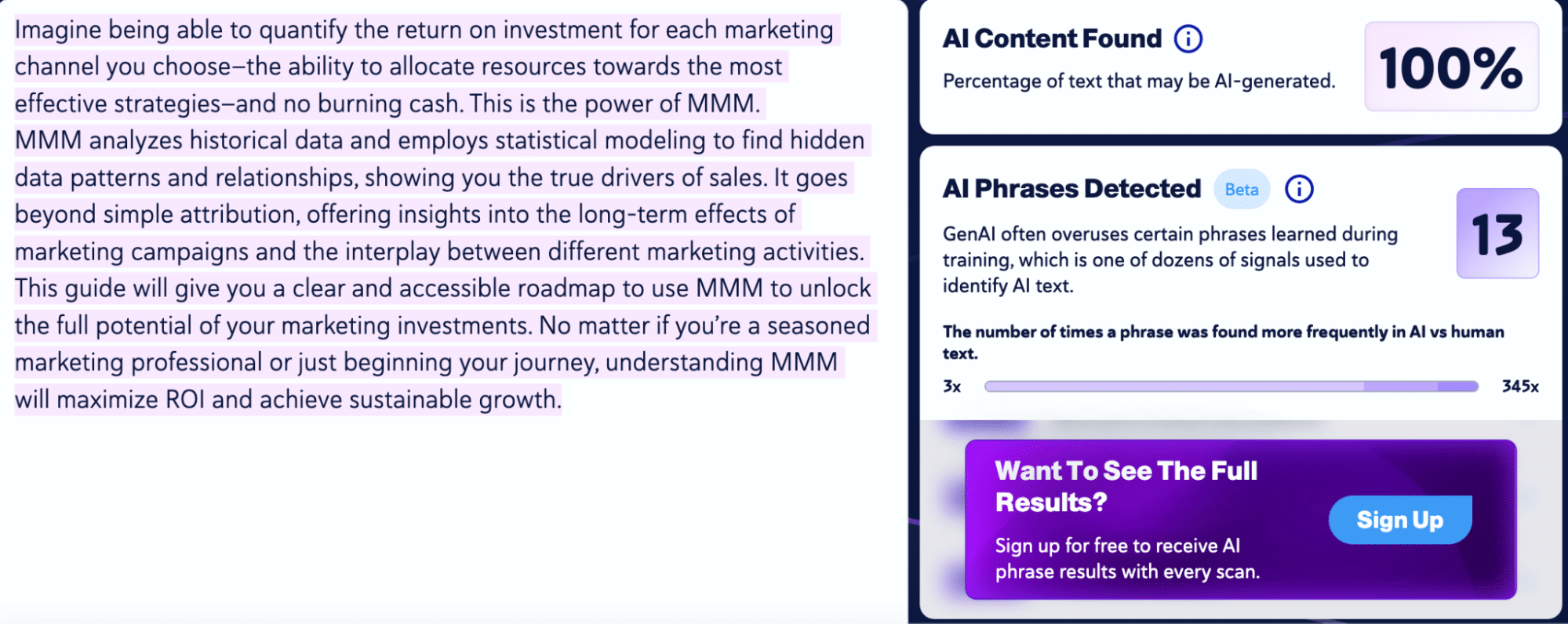

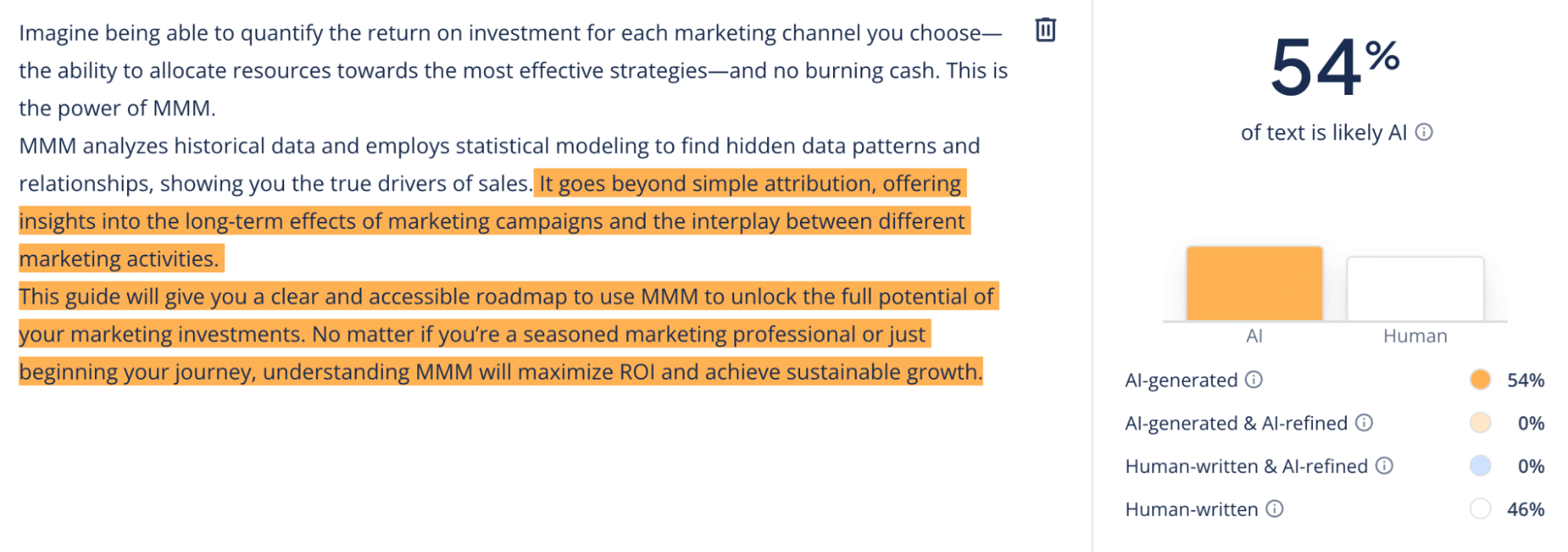

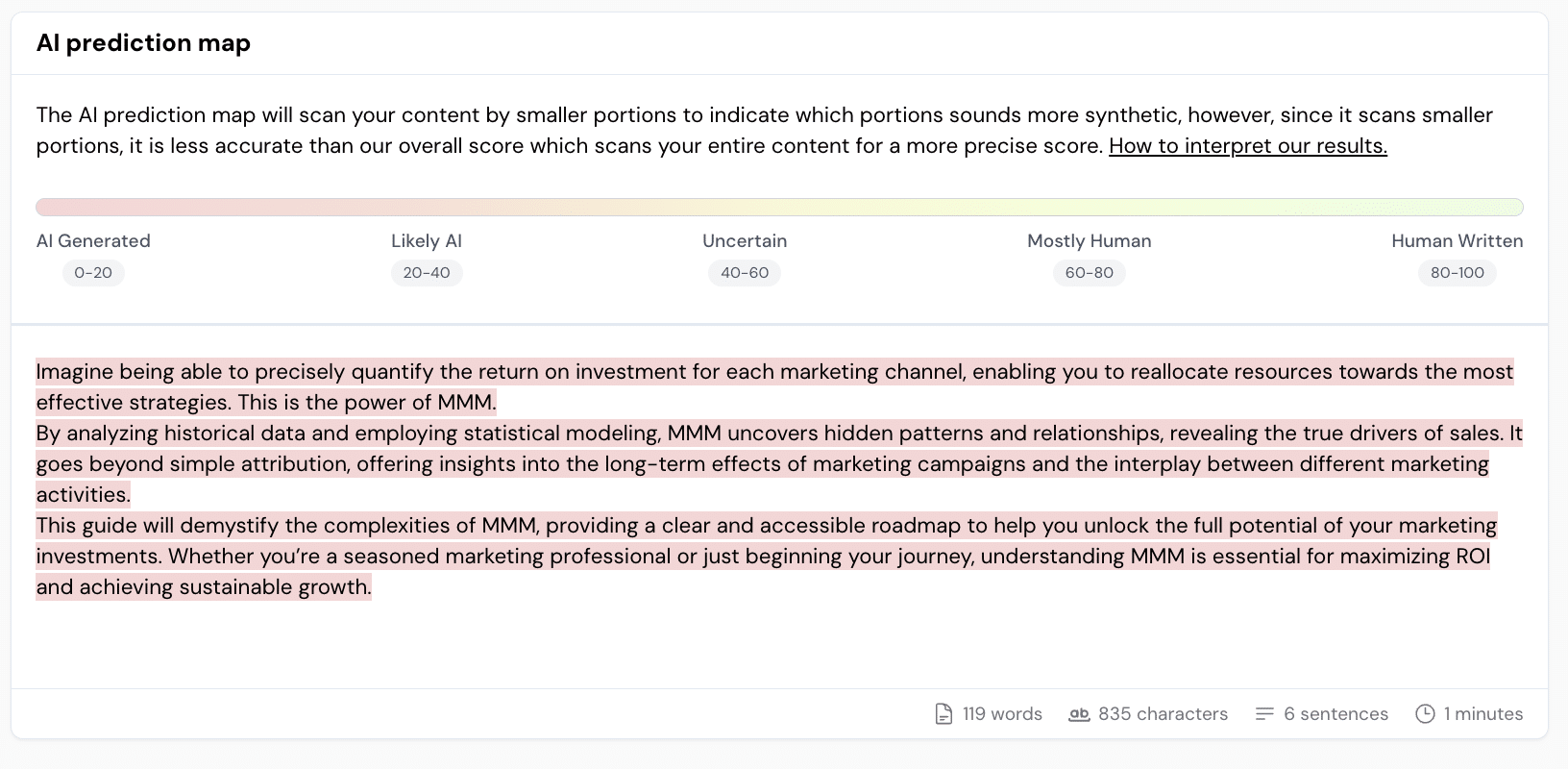

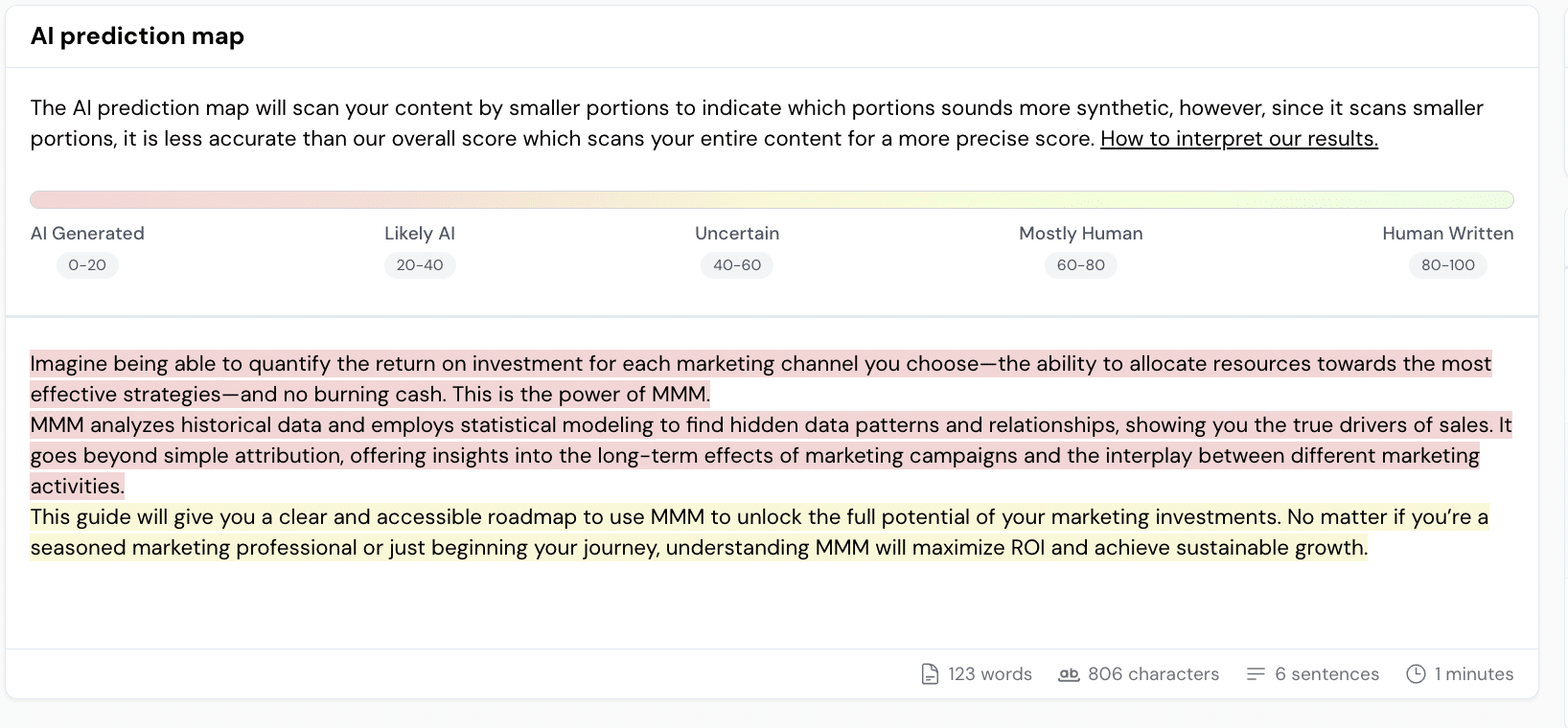

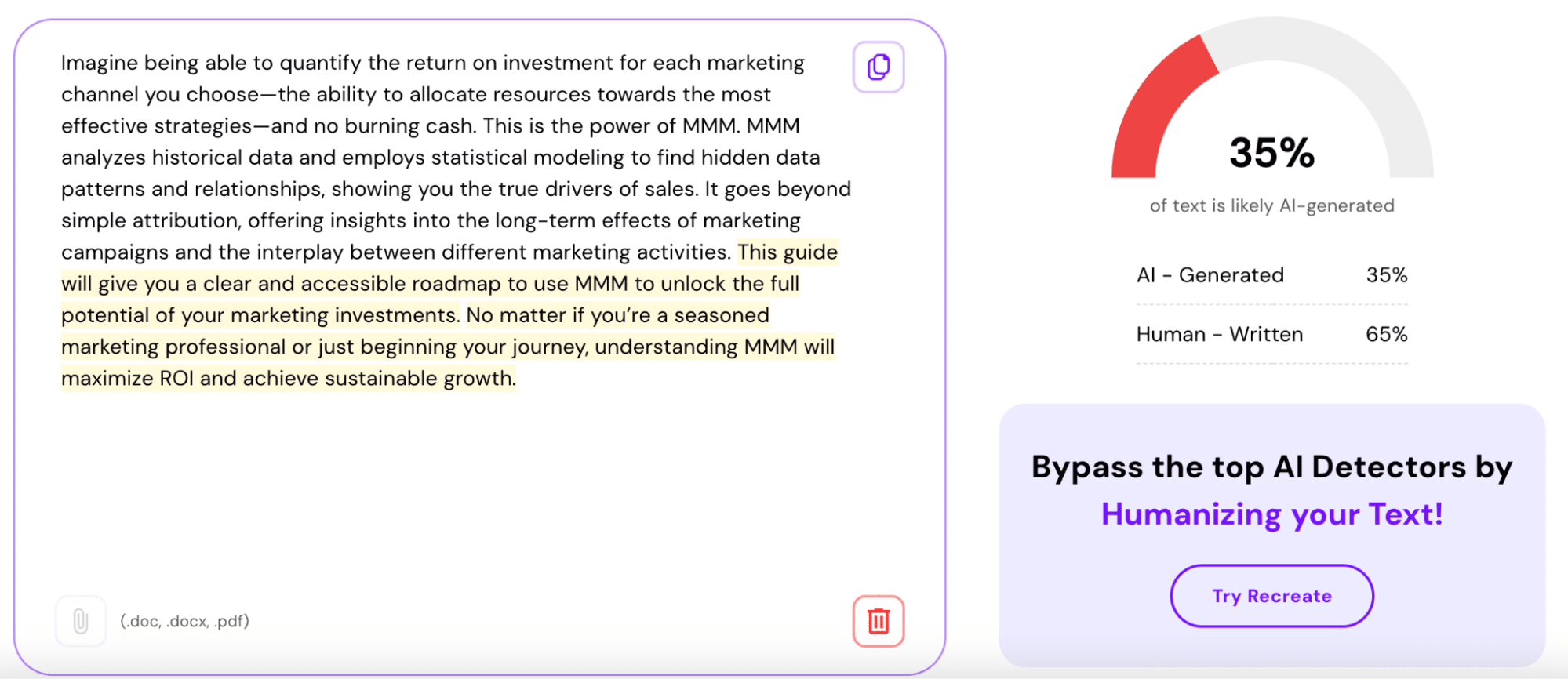

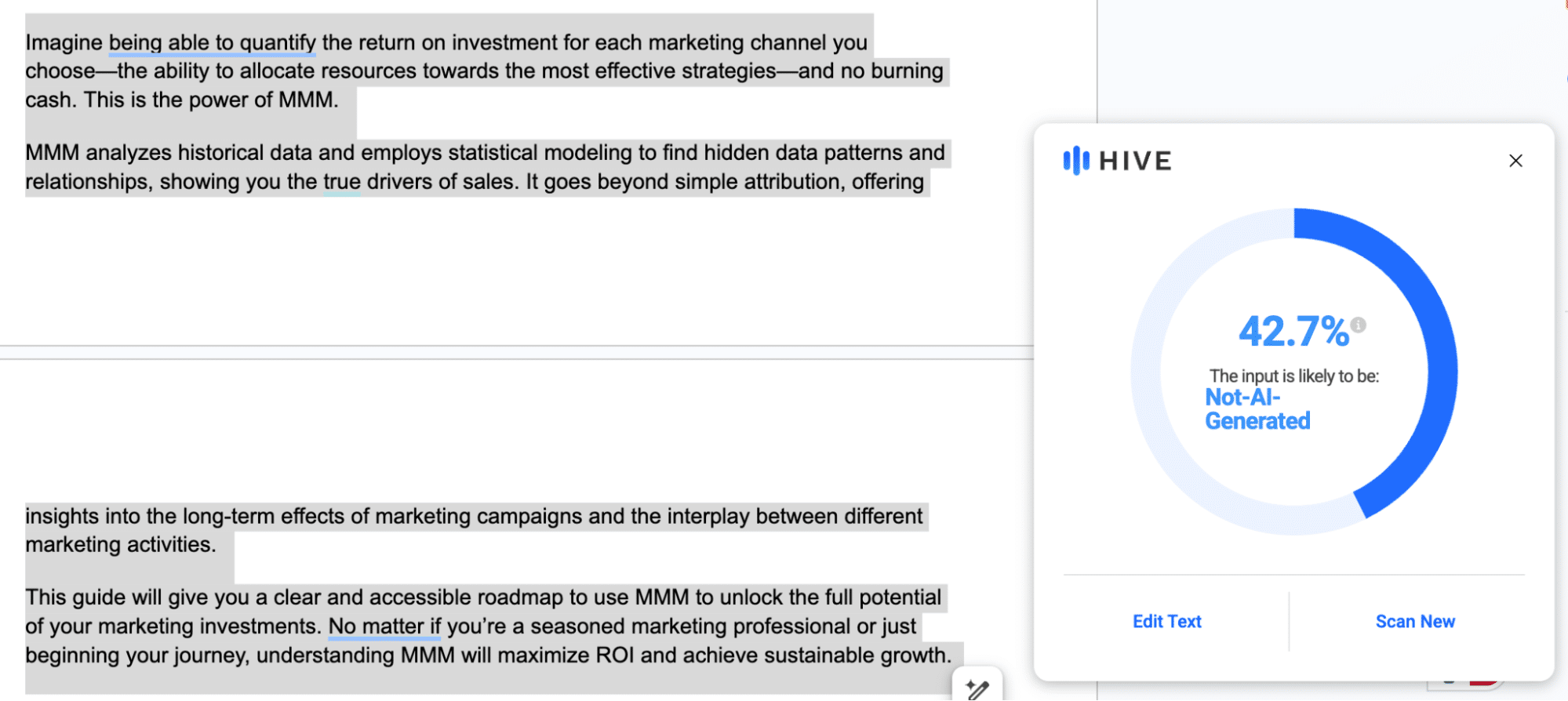

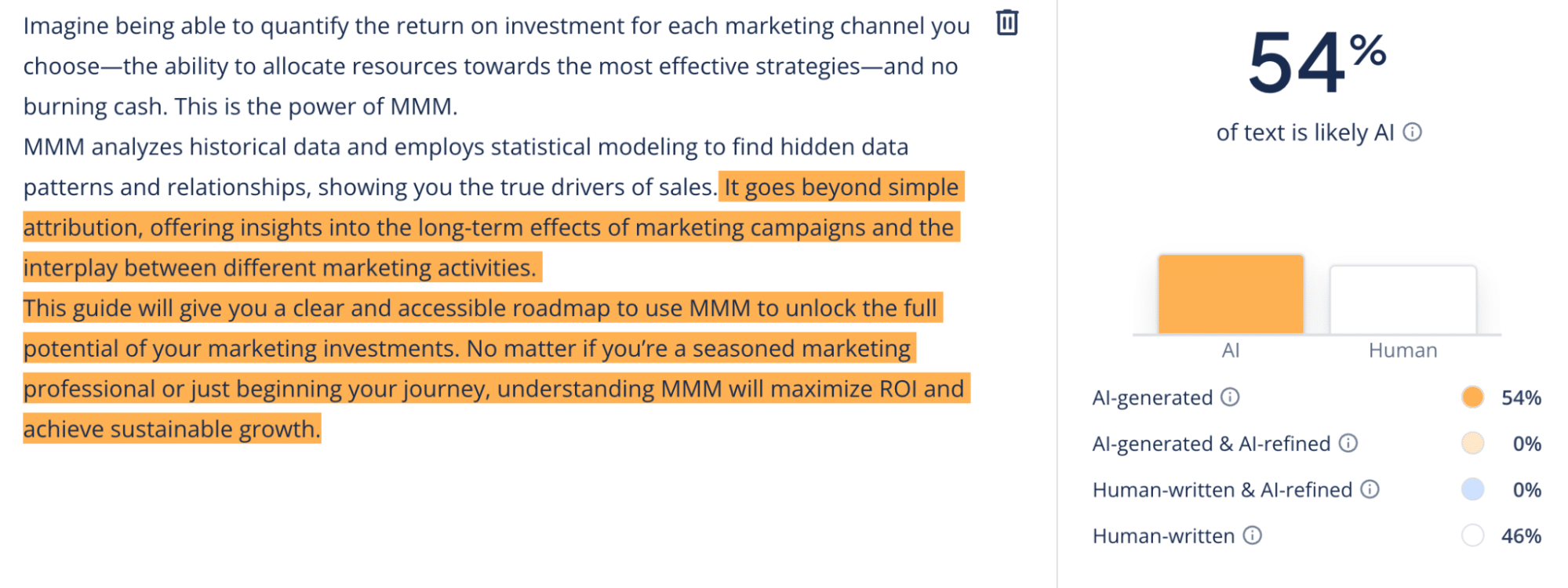

4. AI-generated content, edited by a human

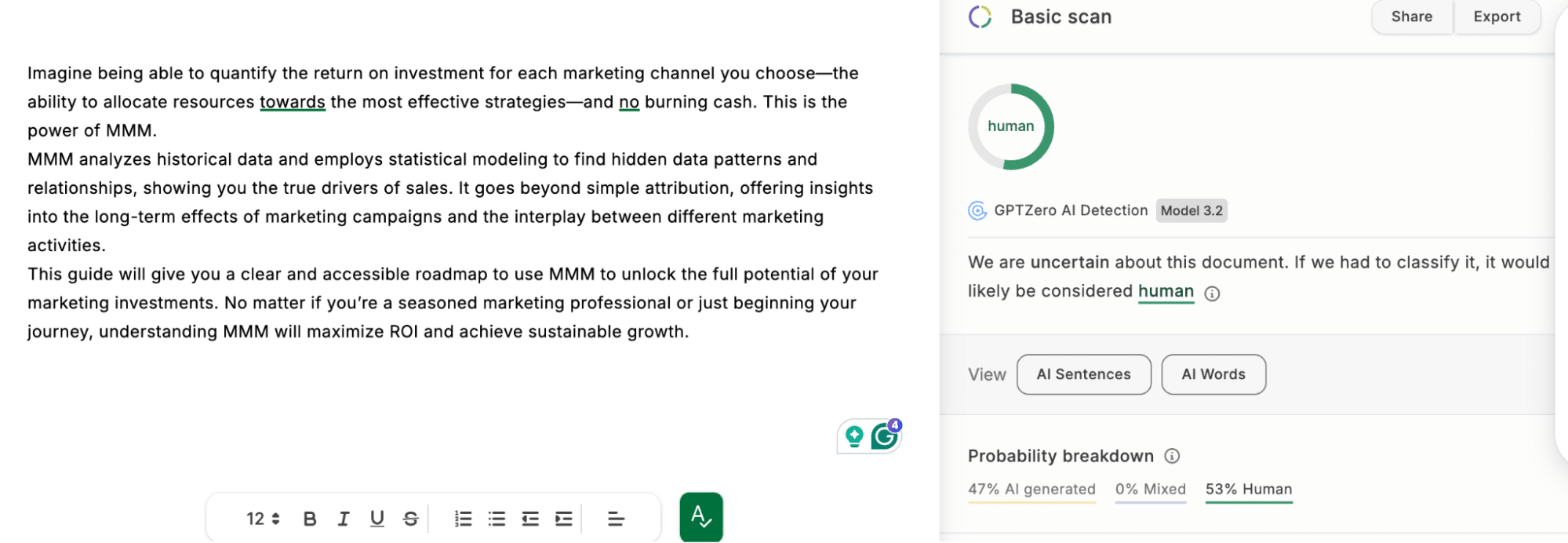

For this version, we started with fully AI-generated content and then a human revised and refined it, making:

- Structural and phrasing improvements

- Stylistic changes for a more natural tone

- Adjustments to sound less robotic while keeping most of the AI-generated content intact

The result:

Why test human-edited content?

- Many professionals use AI as a first draft and edit from there

- We want to see if human editing makes AI-generated content undetectable

- It helps us understand how detectors handle gray-zone content where structure and phrasing have been modified, but the core is still AI.

Features to look for in AI text detectors

Before we get into how each tool performed, it helps to understand what these detectors actually offer and whether the paid versions are worth it. Most tools give you a free summary percentage that tells you how likely the text is AI-generated. But what that score actually means can vary a lot.

Here’s what to look for when choosing an AI detection tool:

- Overall AI score: This is the percentage you see right away. It tells you how confident the tool is that the content was written by AI. For example, 85% AI means the tool thinks there’s a high chance the text was machine-generated.

- Sentence-level breakdowns: These highlight specific lines that triggered detection. Without this, you won’t know what parts of your content need editing. Some tools offer this for free, others require a paid plan.

- Model identification: A few tools try to name which AI model likely generated the text, like GPT-4 or Claude. This capability is helpful if you’re working with different AI tools or trying to reverse-engineer drafts.

- Sensitivity settings: Some tools let you adjust how strict the detector is. This is useful if your threshold for false positives depends on the use case, for example, academic writing vs. marketing content.

- Clarity and feedback: Look for tools that explain why content was flagged, not just that it was. Tools with vague labels and unexplained scores don’t help you improve anything.
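To make the sensitivity point concrete, here is a minimal sketch of how a strictness threshold turns a raw score into a verdict. It reuses the "likely AI" percentages Hive returned for our four samples (listed in the Hive results in this article); the `verdicts` helper and the 30% and 50% thresholds are illustrative assumptions, not settings from any of the tools.

```python
# Hive "likely AI" scores (percent) for our four samples, copied from the
# Hive results in this article. The thresholds used below are illustrative
# assumptions, not values taken from any detector.
HIVE_SCORES = {
    "basic human": 98.4,
    "expert human": 0.0,
    "fully AI": 98.9,
    "human-edited AI": 42.7,
}


def verdicts(scores: dict[str, float], threshold: float) -> dict[str, str]:
    """Label each sample "AI" when its score meets the threshold, else "human"."""
    return {
        name: ("AI" if score >= threshold else "human")
        for name, score in scores.items()
    }


# Compare a stricter (30%) and a more lenient (50%) cutoff.
for threshold in (30.0, 50.0):
    print(threshold, verdicts(HIVE_SCORES, threshold))
```

With these numbers, moving the cutoff from 50% to 30% flips only the human-edited sample from "human" to "AI." The basic human sample stays flagged at both settings, which suggests that a sensitivity slider alone may not rescue simple human writing.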

So, is it worth paying for extras?

- If you’re doing a quick check on a corporate blog post or email, free tools like GPTZero or Quillbot are fine.

- But if you’re managing external writers, publishing at scale or auditing content for SEO, you’ll want a tool that goes deeper.

Top 9 AI detection software tested

For each AI detection software, we evaluated:

- How it works (what methods it uses to detect AI-generated text)

- What’s included in the free version vs. paid version, along with the cost

- How accurate and reliable it is based on our tests

Here’s a quick overview of our AI detection test results:

| AI detection software | Accuracy score | Detection behavior |
|---|---|---|
| Quillbot | 9/10 | Best balance; accurate AI detector without overflagging |
| TraceGPT | 8.5/10 | Very accurate; strong on both AI and mixed content |
| GPTZero | 7/10 | Reliable AI checker for clear AI, but overflags simple human writing |
| Winston AI | 7/10 | Decent on mixed content, but flagged basic human writing heavily |
| Smodin | 6/10 | Inconsistent; some good catches, weak on human-edited AI |
| Originality.ai | 5/10 | Overly aggressive; almost everything flagged as AI |
| Copyleaks | 5/10 | Flags too much; struggles with nuance |
| Hive | 5/10 | Overflags simple writing; decent on expert content, mixed on edited AI |
| Grammarly AI Detector | 4/10 | Very inconsistent; flagged basic human writing as AI, let edited AI pass |

1. GPTZero

Quick verdict: GPTZero is reliable for catching fully AI-generated essays or reports, but it overflags simple human writing. Great for schools but too rigid for marketers.

How does GPTZero work? GPTZero uses two core metrics to detect AI:

- Perplexity: Measures how “surprising” the word choice is based on a language model’s expectations. Low perplexity = more predictable (and more likely AI).

- Burstiness: Measures variation in sentence length. Human writing tends to vary (short-long-short), while AI is often more uniform.

The idea: humans write with inconsistency and idiosyncrasies. AI doesn’t (unless it’s been prompted to fake that).
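The two metrics above can be illustrated with a toy sketch. This is not GPTZero’s implementation, which is proprietary. It assumes burstiness can be approximated as the coefficient of variation of sentence lengths, and it swaps a real language model for a tiny add-one-smoothed unigram model, so the function names and the scoring are assumptions made purely for illustration.

```python
import math
import re
from collections import Counter


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation (std / mean) of sentence lengths; 0.0 means uniform."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    A stand-in for a real language model: lower values mean the words in
    `text` are more predictable given the reference corpus.
    """
    ref_counts = Counter(reference.lower().split())
    total = sum(ref_counts.values())
    vocab_size = len(ref_counts) + 1  # one extra slot for unseen words
    words = text.lower().split()
    if not words:
        return float("inf")
    log_prob = sum(
        math.log((ref_counts[w] + 1) / (total + vocab_size)) for w in words
    )
    return math.exp(-log_prob / len(words))


uniform = "One two three. Four five six. Seven eight nine."
varied = "Stop. This sentence is quite a bit longer than that. Yes."
print(burstiness(uniform), burstiness(varied))
```

In this sketch, text with evenly sized sentences scores a burstiness of zero, and text made of words the reference model has already seen scores a lower perplexity than text full of unseen words. A production detector would use a large language model and far more signals.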

Where it fails: GPTZero sometimes flags simple human writing as AI because it’s predictable or lacks variation, not because it is AI.

Why it matters: These two metrics work well for essays or long-form content but break down in short-form copy, edited content or polished corporate writing that mimics AI tone.

Free GPTZero features: Score without sentence-level highlights (you get five free advanced scans)

GPTZero pricing: $14.99 per month for basic AI content detection

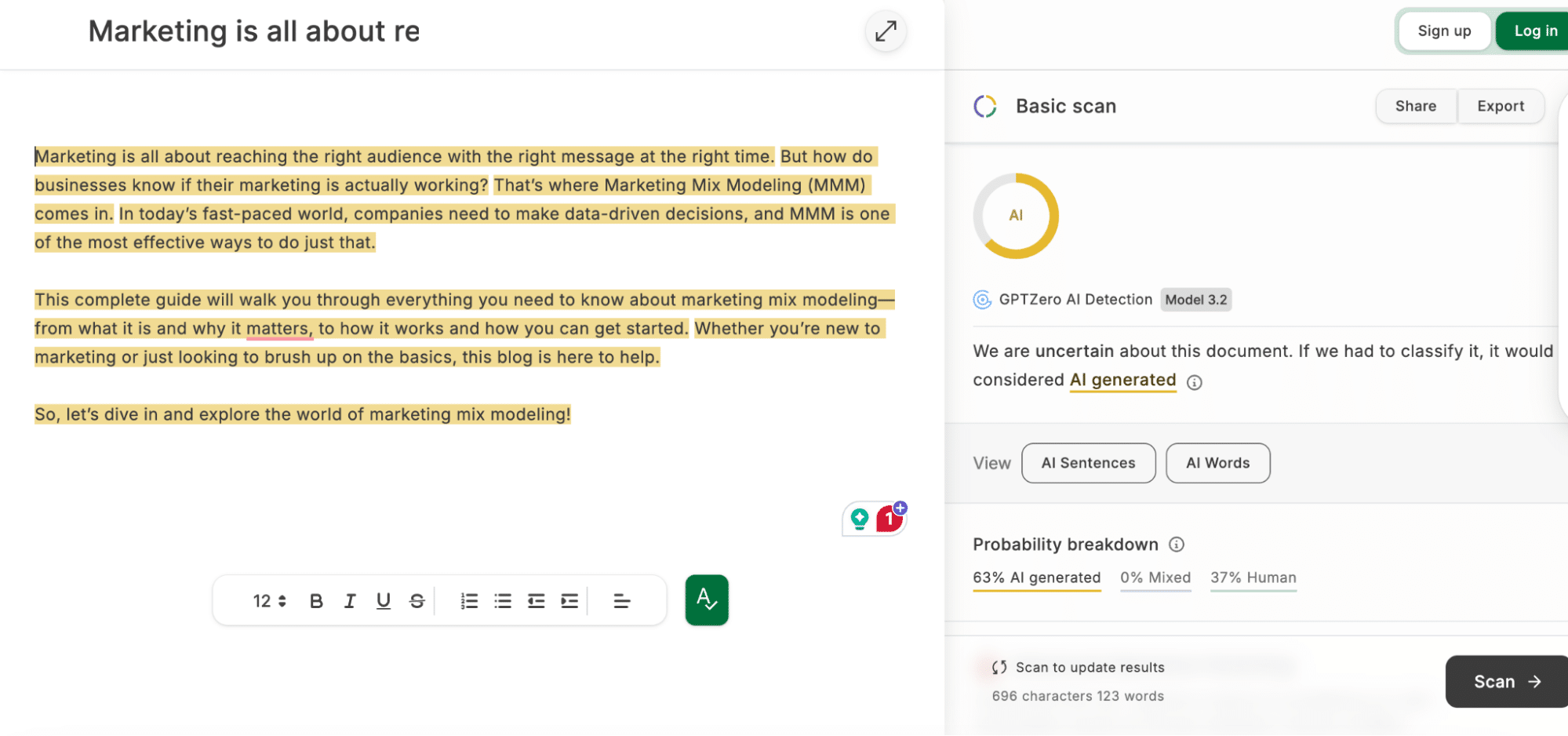

Below, you’ll see how GPTZero responded to the four content types: basic human-written, expert human-written, fully AI-generated and human-edited AI. Each screenshot shows the tool’s score, flags and labels, so you can see exactly what it caught, what it missed and how consistent (or inconsistent) it was.

Basic human-written content: Flagged as AI.

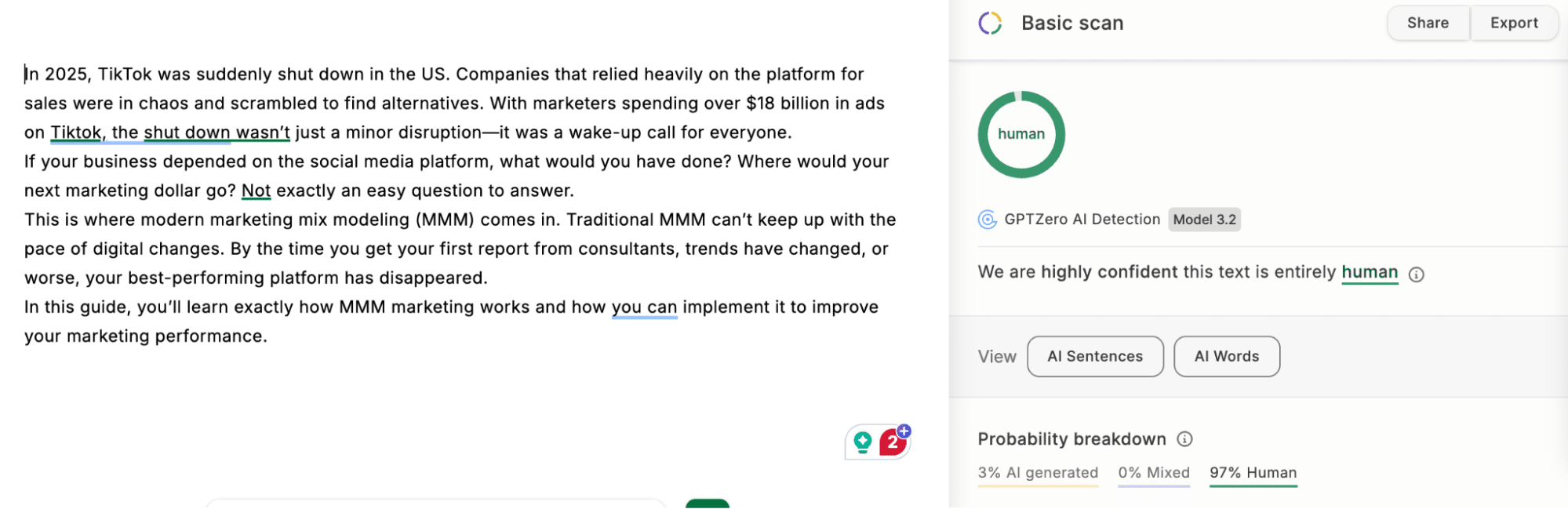

Expert human-written content: Recognized as human by GPTZero.

Fully AI-generated content: Correctly flagged as AI by GPTZero.

Human-edited AI content: Marked as human by GPTZero.

2. Originality.ai

Quick verdict: Highly sensitive detector with customizable risk tolerance. Ideal for publishers who need to catch even lightly edited AI, but overkill for everyday content teams.

How does Originality.ai work? Originality.ai uses a proprietary ensemble model. Here’s what we know:

- Originality scans your content sentence-by-sentence and applies multiple classifiers (likely trained on GPT-3, GPT-4 and Claude).

- You can choose between Standard 2.0 (more lenient) and Turbo 3.0 (extremely strict).

Turbo 3.0 will flag any text that resembles AI, even if it’s just edited AI or clear, clean human writing.

Why it matters: Originality is the only tool in the list that gives you risk tolerance control. If you’re a publisher that can’t afford false negatives, you’ll want Turbo. But if you’re an editor who works with AI writing, Turbo is probably too strict.

Free Originality.ai features: Three free scans per day, with a 300-word limit

Originality.ai pricing: $14.95 per month

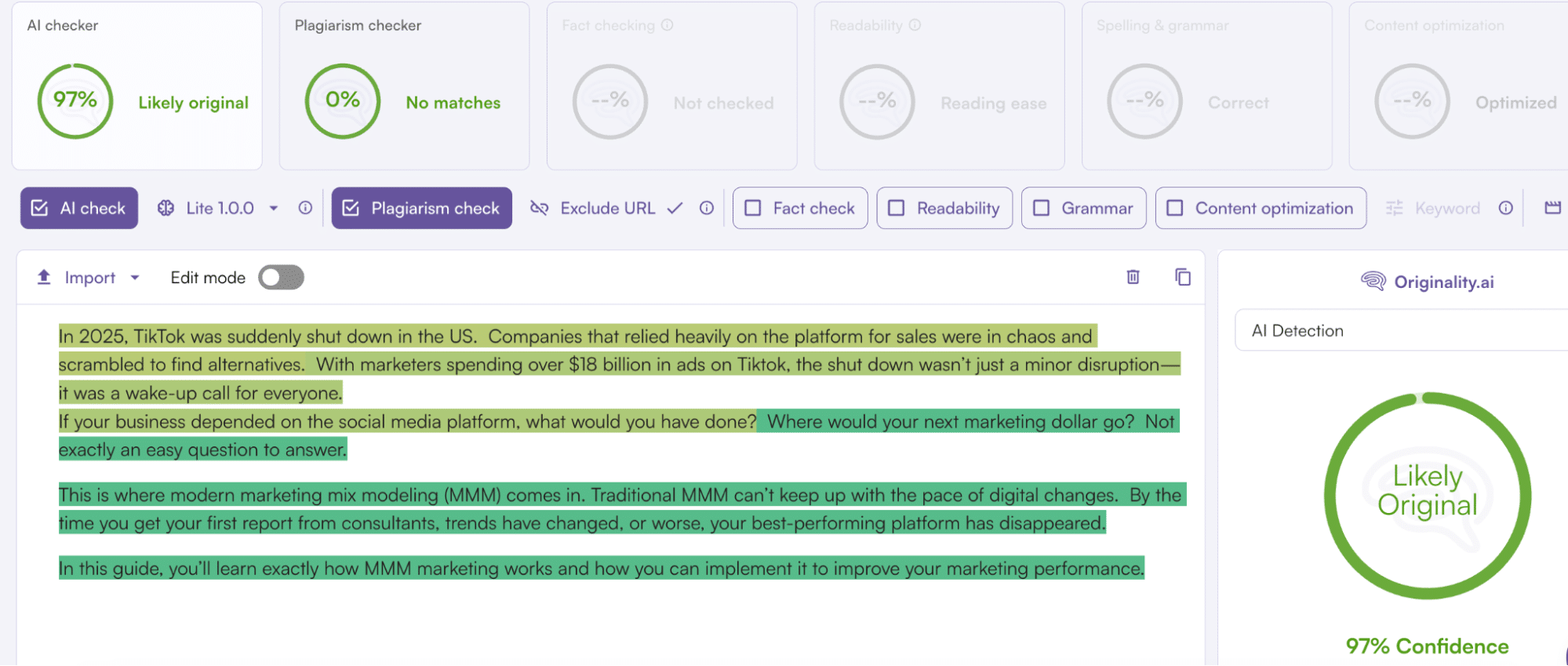

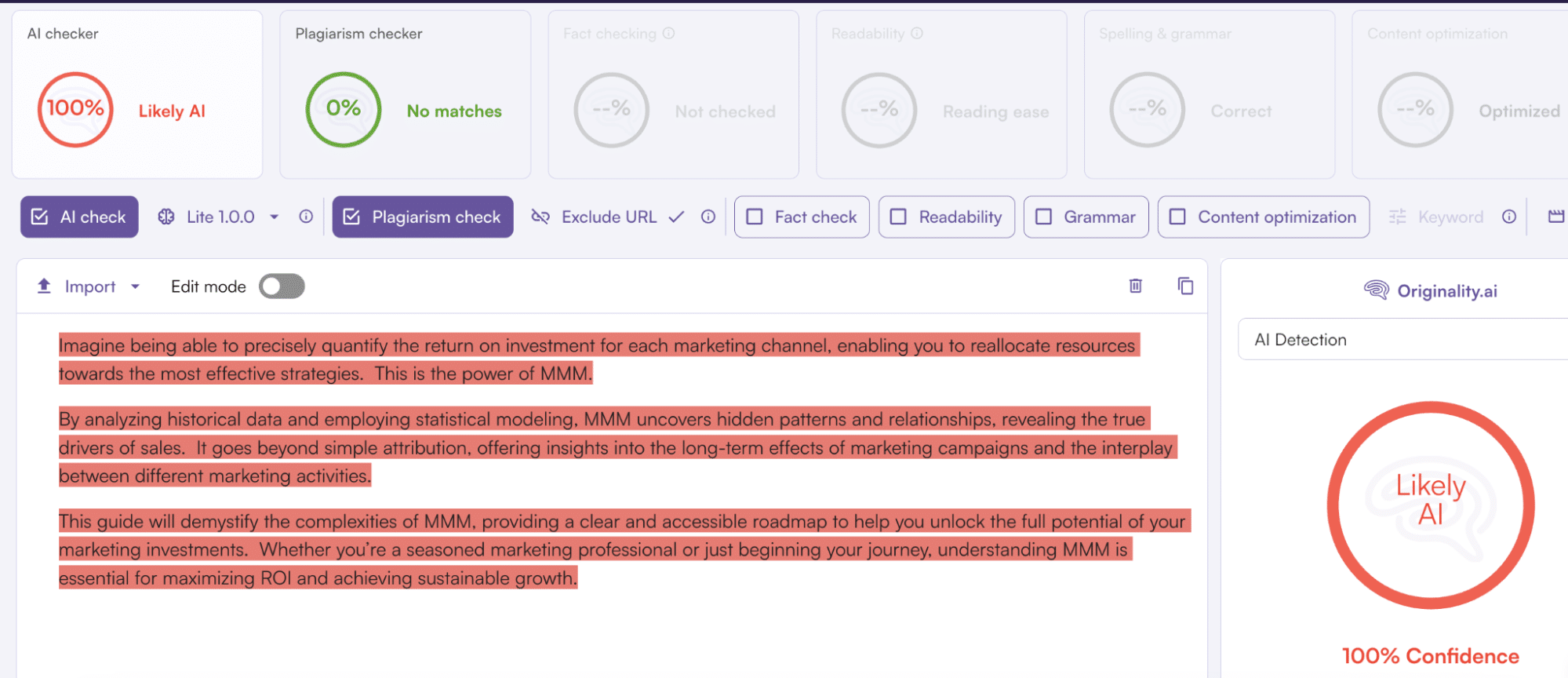

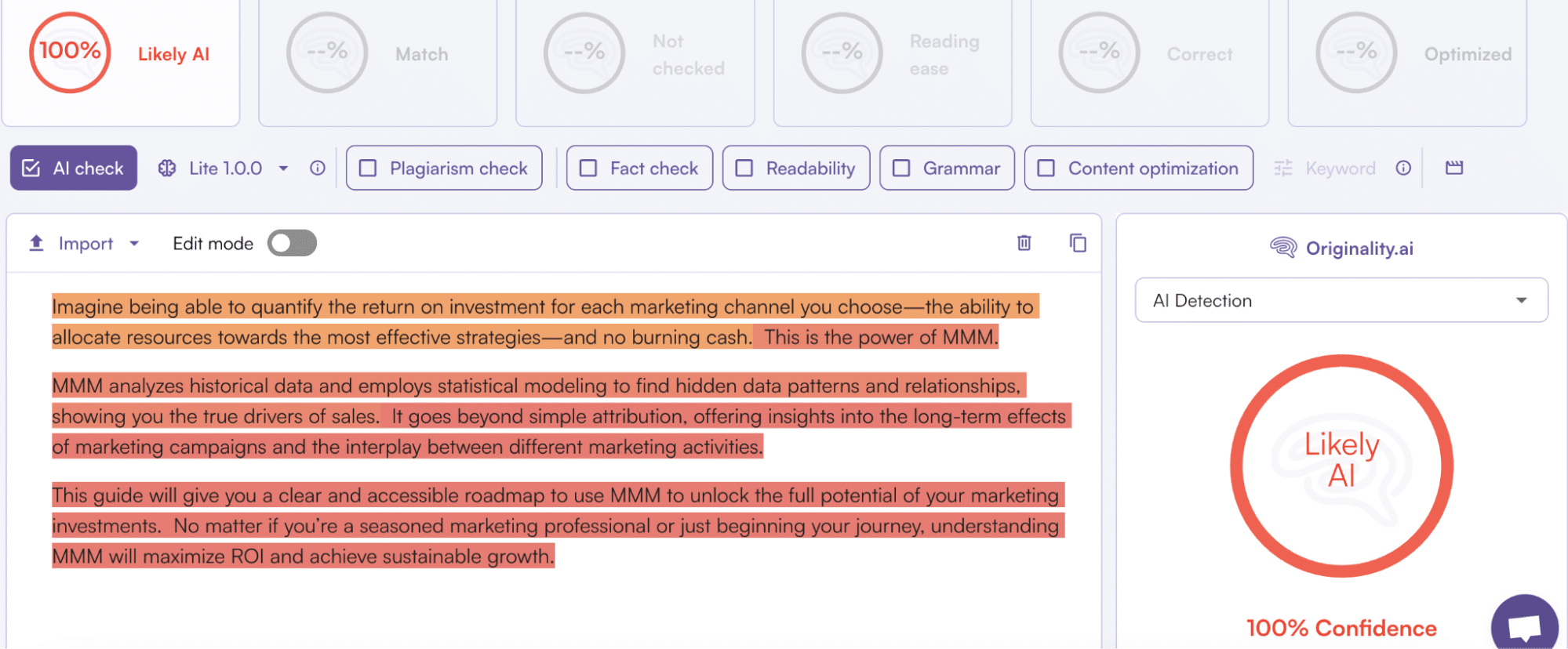

Here’s how Originality responded to the four content types.

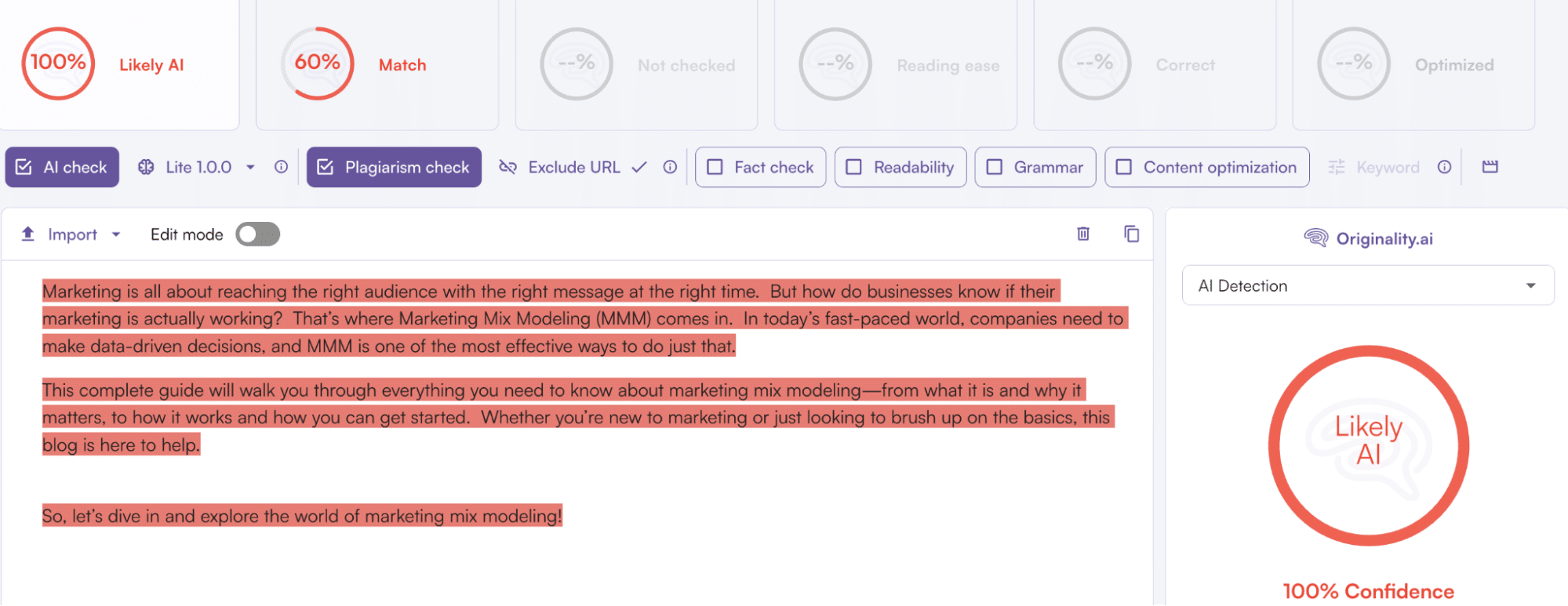

Basic human-written content: Completely flagged as AI.

Expert human-written content: Recognized as human by Originality.ai with 97% confidence. The tool still marked some sentences as “likely AI.”

Fully AI-generated content: Correctly flagged as AI by Originality.ai.

Human-edited AI content: Still flagged as AI by Originality.ai.

3. Grammarly AI

Quick verdict: Good for a quick gut-check while editing in Grammarly, but not detailed or accurate enough for high-stakes content review.

How does Grammarly AI work? Grammarly’s AI detector is built into its writing suite. It uses an internal classifier, likely trained on GPT variants, to identify AI-generated content at a high level.

There’s no burstiness or sentence-level breakdown (in the free version), just a single percentage that tells you how confident Grammarly is that the text is AI-generated.

Why it matters: It’s good for a gut check. But because it’s not transparent and lacks depth, it shouldn’t be used to make publishing or grading decisions.

Free Grammarly AI features: Score only, no breakdown

Grammarly AI pricing: $12 per month ($15/month when using the business plan)

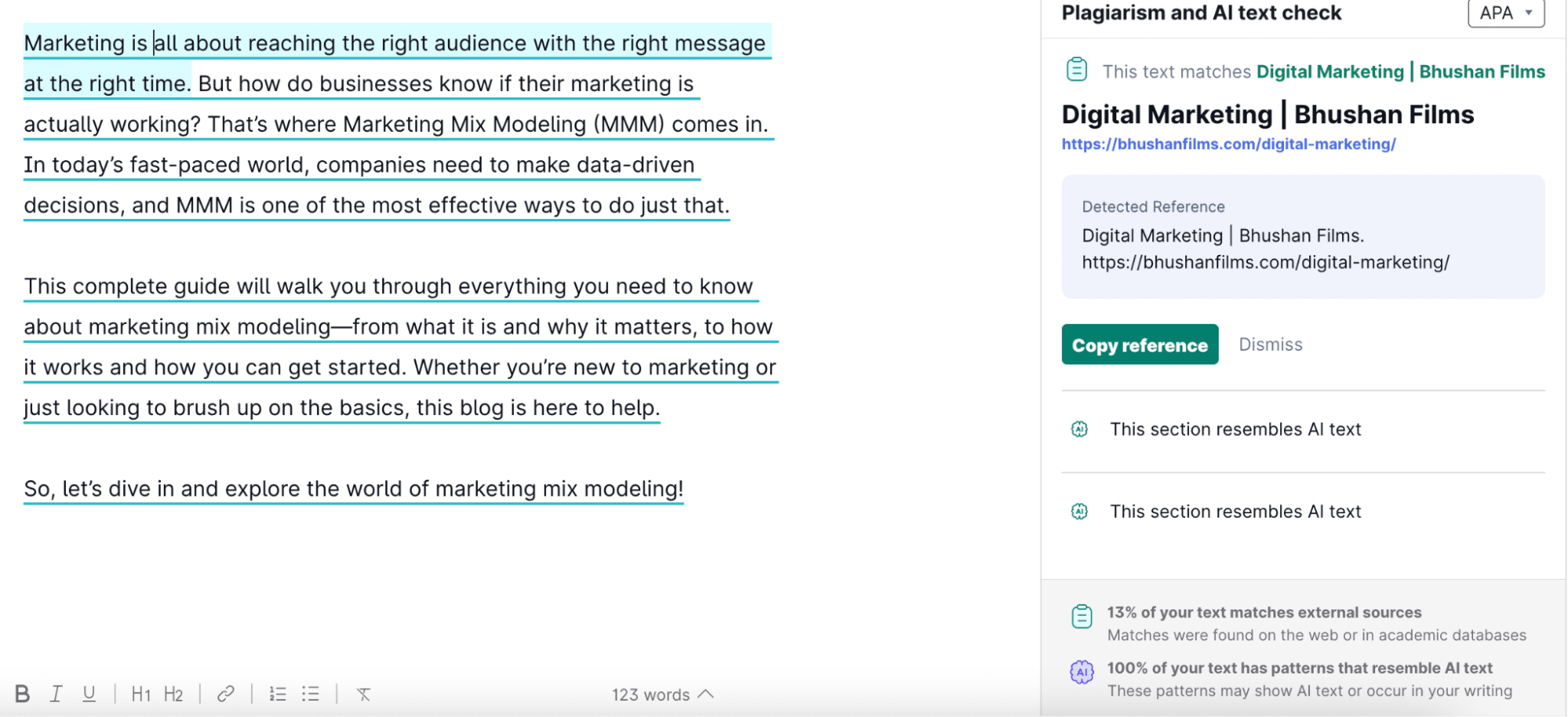

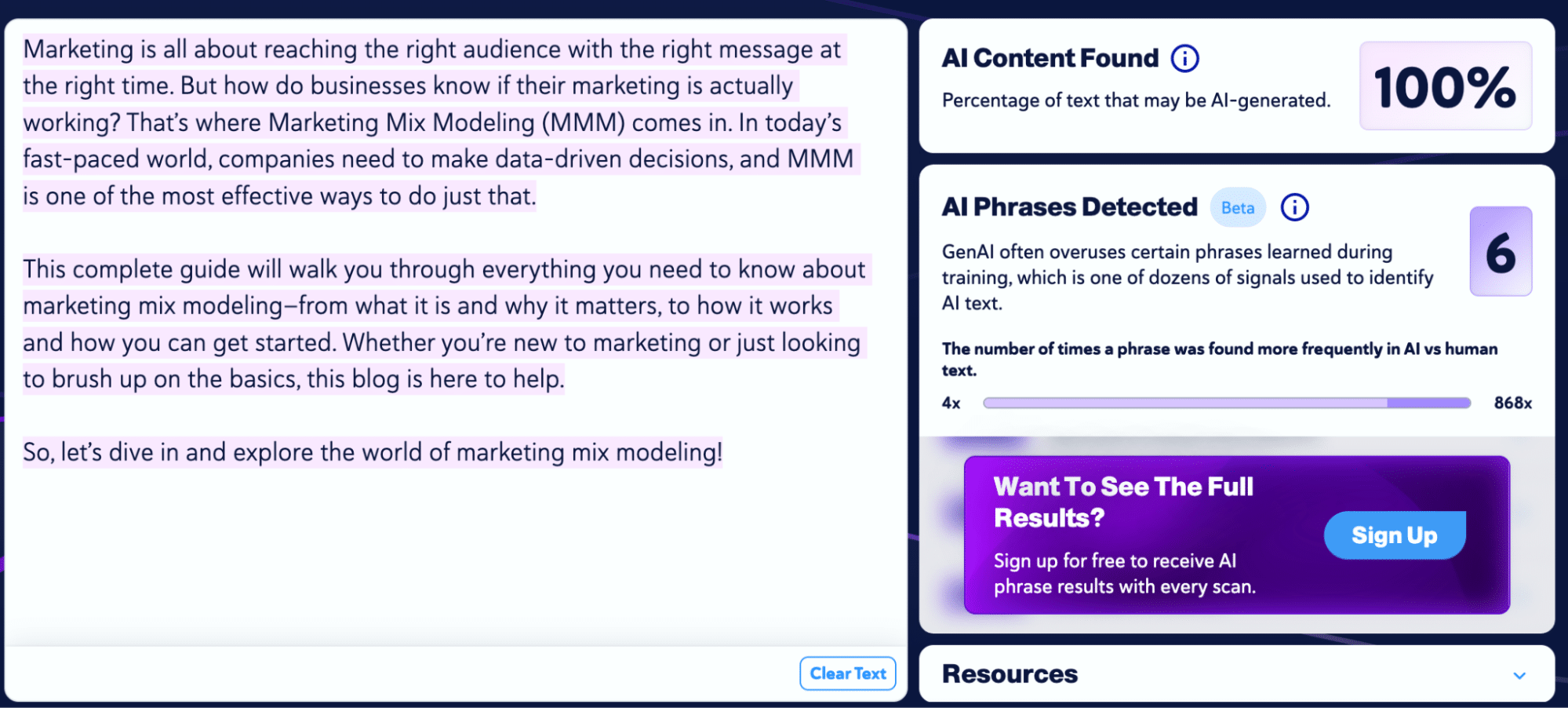

The following images show how Grammarly’s AI content detection feature reacted to the four different types of texts. We used Grammarly’s premium subscription here to show the sentence-level breakdown.

Basic human-written content: 100% of the text was flagged as AI, showing that basic human writing can still trip AI detectors.

Expert human-written content: Recognized as human by Grammarly AI Detector.

Fully AI-generated content: Partially flagged as AI by Grammarly. This result was interesting: Grammarly marked the entire basic human-written content as AI, yet flagged only 66% of the actual AI-generated content.

Human-edited AI content: Passed as human by Grammarly AI Detector. Again, an interesting result. 66% of the AI-generated content was flagged. And that same content, when edited minimally, passed with flying colors.

4. Copyleaks AI detector

Quick verdict: Strong sentence-level tagging with clear labels like “likely AI.” Useful for editors cleaning up AI-assisted drafts, but can overflag human content.

How does Copyleaks AI detector work? Copyleaks AI detector blends linguistic pattern analysis (like perplexity) with deep-learning models. It gives you:

- A holistic AI probability score

- Sentence-level AI probability tagging (for example, “likely AI,” “possibly AI”)

- Source model classification (e.g., GPT-4, Gemini)

Why it matters: Copyleaks is one of the few tools that tries to identify which model likely generated the content. That’s useful in enterprise or academic contexts.

Free Copyleaks AI features: Score and sentence-level breakdown

Copyleaks AI detector pricing: $7.99 per month

We tested all four of our texts, and this is how they performed on Copyleaks’ AI detection tool:

Basic human-written content: Flagged 100% as AI.

Expert human-written content: Recognized as human by Copyleaks.

Fully AI-generated content: Correctly flagged as AI by Copyleaks.

Human-edited AI content: Still flagged 100% as AI by Copyleaks.

5. Quillbot

Quick verdict: Quillbot AI detector delivered the most balanced results, accurately detecting AI without overflagging clean human writing.

How does Quillbot AI detector work? Quillbot is a paraphrasing tool first. Its AI detection feature is an add-on. It gives a top-line judgment based on probability scores but doesn’t explain how those scores are calculated.

Based on behavior, it likely uses a combination of:

- Fluency scoring (checking for formulaic phrasing)

- Statistical probability based on known LLM outputs

Why it matters: Because Quillbot is built to rewrite content, it can also help you remove AI patterns. If a draft passes its own detection model after being rewritten, that’s a good sign that the content is safe to use. But as a standalone detector, it lacks depth and transparency.

Free Quillbot features: Score, along with sentence highlights

Quillbot pricing: $8.33 per month with a three-day money-back guarantee

Here’s a breakdown of Quillbot’s AI detection model performance:

Basic human-written content: Recognized as human.

Expert human-written content: Recognized as human by Quillbot.

Fully AI-generated content: Correctly flagged as AI by Quillbot.

Human-edited AI content: Partially flagged as AI by Quillbot — one of the few models to do so.

6. Winston AI

Quick verdict: Winston flags the slightest bit of AI pattern in a text, making it an unreliable judge of formal tone and voice.

How does Winston AI work? Winston AI uses a combination of:

- AI pattern recognition (trained on GPT, Claude and Gemini)

- Linguistic complexity analysis

- Optical Character Recognition (OCR) to read handwriting and scanned documents

Winston AI provides a probability score (0-100%) for AI generation and sentence-level tagging with visual highlights. Its detection thresholds are meant to be conservative and reduce false positives, although in our test it still flagged the basic human-written sample as AI.

Why it matters: Winston AI is purpose-built for academic and publishing workflows. It integrates with platforms like Google Docs, Google Classroom, Blackboard and Zapier, making it easy to plug into editorial or educational pipelines.

Free Winston AI features: Overall AI probability score with basic explanation

Winston AI pricing: $18 per month for the basic plan. Team plans start at $29 per month.

Basic human-written content: Flagged as AI (2% human score). Winston AI incorrectly flagged simple human writing as AI. The low score suggests it penalized the lack of variation or stylistic complexity.

Expert human-written content: Recognized as human (78% human score). This was the highest human score Winston gave.

Fully AI-generated content: Correctly flagged as AI (0% human score). Winston AI caught this one easily. No surprise here.

Human-edited AI content: Still flagged as AI (1% human score). Despite editing, Winston scored it as 99% AI. Even small AI fingerprints were enough to trigger a near-complete flag.

7. Smodin

Quick verdict: Budget-friendly tool for large volumes of AI content detection. Good enough for marketers and students — but less reliable for catching subtle AI edits (we were able to bypass detection with the “human-edited” text).

How does Smodin work? Smodin detects AI using a proprietary multi-model classifier that claims to support content generated by:

- ChatGPT (GPT-3.5, GPT-4)

- Claude

- Google Bard (Gemini)

The tool measures likelihood of AI and provides a confidence percentage. You get sentence-level breakdowns and flagging, but there’s no explanation of methodology or training data.

Smodin also includes generative AI tools (rewriting, summarizing, translating), making it a hybrid platform.

Why it matters: It’s affordable and allows unlimited detection scans on paid plans. Good for marketers or students who want volume-based AI detection without paying per credit.

Free Smodin features: Limited checks per day

Smodin pricing: Plans start at $15 per month.

Basic human-written content: Flagged as AI. Smodin misclassified the basic human-written text as AI. The clean structure and simplicity likely triggered a false positive.

Expert human-written content: Recognized as human. Smodin correctly identified the expert-level content as human. It handled nuance, varied phrasing and original tone without overflagging.

Fully AI-generated content: Correctly flagged as AI. The tool accurately flagged the AI-generated draft, catching standard machine-written patterns.

Human-edited AI content: Scored 35% AI. Smodin partially flagged the human-edited content. A 35% AI score shows it picked up on residual AI structure but also acknowledged human intervention.

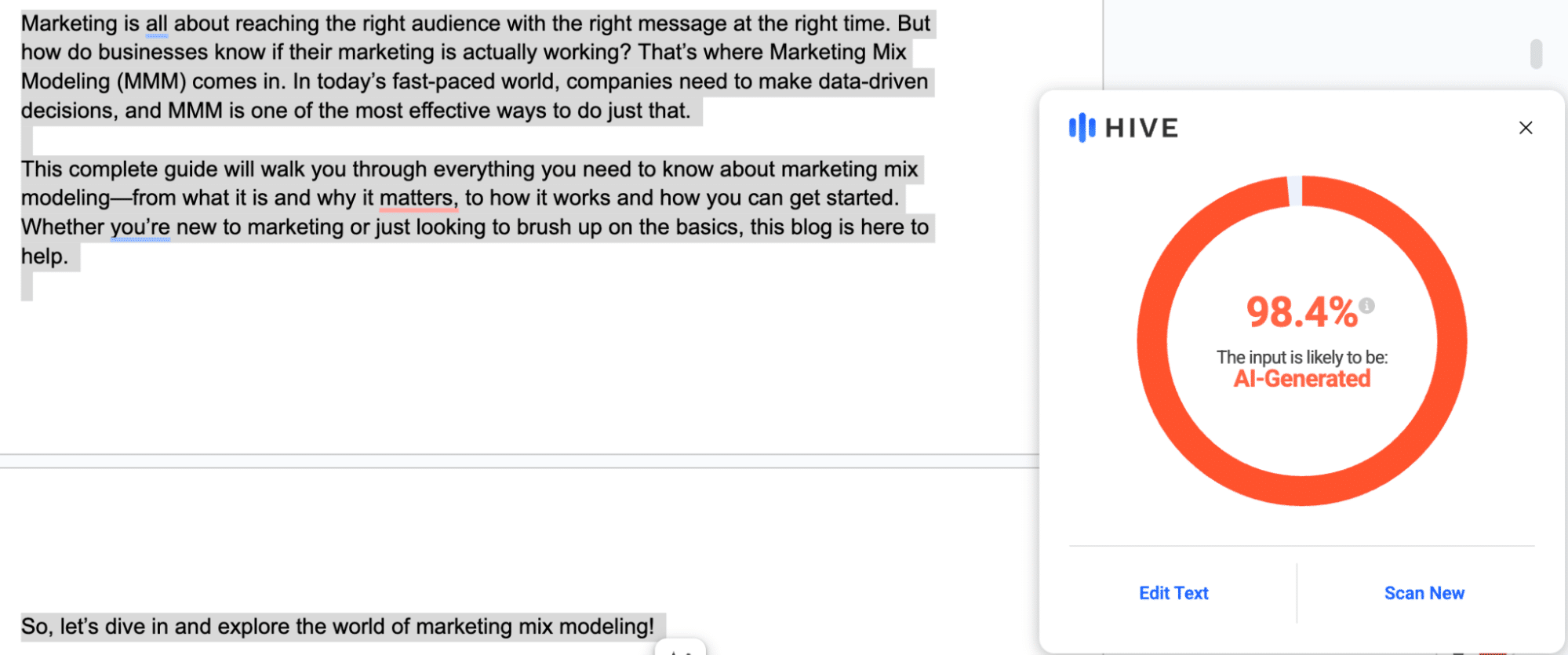

8. Hive

Quick verdict: Fast and free detector that comes with a browser extension. Great for casual scanning, but it misclassified basic human content as AI in our tests.

How does Hive AI work? Hive’s AI detection tool is part of its broader moderation suite and was initially built to flag toxic or unsafe content. For text analysis, Hive relies on:

- Sentence structure modeling

- Pattern frequency analysis

- Probabilistic modeling tuned to common LLM outputs

Hive gives you a top-line probability score (0–100%) with a binary decision: likely or unlikely AI-generated. No sentence-level explanation or source LLM prediction is provided.

Why it matters: Hive is incredibly fast. Use it for quick gut checks, not high-stakes evaluations.

Free Hive features: Score only

Hive pricing: Free for basic use (custom pricing for bulk usage)

Basic human-written content: Flagged as AI (98.4% likely AI), suggesting Hive penalized simplicity and lack of variation.

Expert human-written content: Recognized as human (0% AI), correctly identifying it as human-written with strong confidence.

Fully AI-generated content: Correctly flagged as AI (98.9% likely AI).

Human-edited AI content: Partially flagged as AI (42.7% likely AI). A 42.7% AI score shows Hive detected both human edits and leftover AI patterns.

9. Scribbr AI detector

Quick verdict: Scribbr’s AI detector uses the same detection model as Quillbot (both are owned by Learneo).

How does Scribbr AI detector work? Scribbr and Quillbot both share the same underlying AI detection model. So when you run content through either tool, you’re essentially getting the same detection logic and results.

The difference comes down to audience and context:

- Quillbot is designed for rewriting, paraphrasing and general content editing, commonly used by students, marketers and casual users.

- Scribbr is focused on academic integrity. It’s part of a larger platform used for proofreading, plagiarism checking and citation management in universities and research settings.

So while the detection tech is the same, the branding, user experience and surrounding features are tailored to different use cases. If you’re already working within the Scribbr ecosystem, the AI detection feature is a convenient add-on, not a standalone tool meant to compete with Quillbot.

Why it matters: If you’re working in an academic setting and already using Scribbr for citation or proofreading, the built-in AI detection makes it convenient.

Free Scribbr AI features: Score and breakdown

Scribbr AI pricing: Free to use

Basic human-written content: Recognized as human. Like Quillbot, Scribbr didn’t overreact to simple phrasing or repetitive structure.

Expert human-written content: Recognized as human. Scribbr handled nuance, original thought and variation well.

Fully AI-generated content: Correctly flagged as AI.

Human-edited AI content: Partially flagged as AI. The detector showed mixed results for human-edited content, similar to Quillbot, which suggests it noticed edits but still caught lingering AI structure.

The results: What is the best AI text detection tool?

Most AI detectors in our test were aggressive, often to the point of being unusable for anything except catching fully AI-generated content.

The reason: Most AI text detection tools don’t actually detect creativity or intent. They uncover patterns. And in this test, that made them overly aggressive with basic human writing, reliable on expert writing and mixed (at best) on human-edited AI.

The artificial intelligence detection pattern we saw

| Content type | How most AI detector tools behaved |
|---|---|
| Basic human writing | Frequently flagged as AI (false positives), especially as the writing was simple, repetitive and lacked stylistic flair |
| Expert human writing | Most tools passed expert human content with high confidence; AI detectors trust depth, original phrasing and varied sentence structure |
| Fully AI-generated | Every tool flagged the fully AI-generated text, as expected, though Grammarly flagged only 66% of it |
| Human-edited AI | The "gray zone": most tools still flagged 35% to 100% of content as AI, even after human editing |

AI text detection tool accuracy: Key takeaways

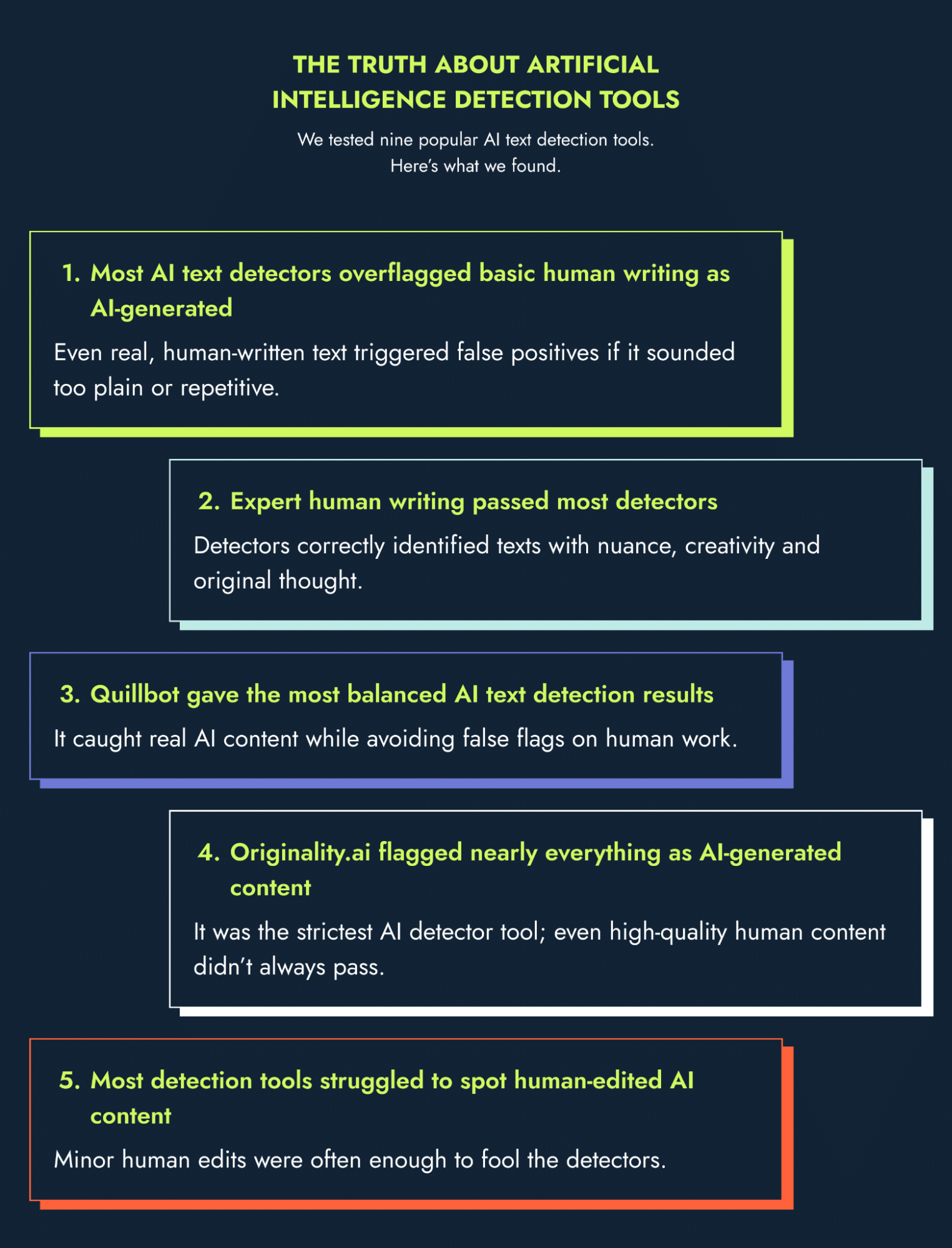

If we could summarize our AI content detection experiment in five takeaways, here’s what we’d say:

1. Most tools overflagged basic human writing as AI-generated

If your writing is clean, simple or formulaic, tools like Originality.ai, Copyleaks, Winston, Smodin and Hive will likely assume it’s AI.

⚖️ Verdict: These tools don’t “understand” writing — they hunt for patterns. Simplicity = suspicious.

2. Expert human writing passed almost everywhere

Varying sentence lengths, original analogies, data references and human nuance reliably passed through detectors like GPTZero, Quillbot, Copyleaks and others.

⚖️ Verdict: If your writing is complex, stylistically varied or creative, you’re safe.

3. Quillbot was the most balanced AI detector

Quillbot was the only tool that:

- Trusted both basic and expert human writing

- Flagged fully AI content correctly

- Showed restraint with human-edited AI (54% AI detected — reasonable for mixed content)

⚖️Verdict: Quillbot gets the real-world use case right: it catches AI when it matters, but doesn’t punish clear human writing.

4. Originality.ai was the most aggressive

Originality.ai flagged everything except expert human writing as AI, including basic human writing and lightly edited drafts.

⚖️Verdict: Use this if you’re a publisher or agency with zero tolerance for AI, but expect false positives.

5. Most detection tools struggled with human-edited AI

This is the hardest test. Even after editing for tone, structure and phrasing, many tools still flagged 35% to 100% of the content as AI.

⚖️ Verdict: Human editing reduces AI scores but doesn’t fully erase detectable patterns. Most tools aren’t sophisticated enough to handle this yet.

Are AI detector tools always right? Can they be trusted for high-stakes decisions?

No, AI detectors aren’t always right, and they shouldn’t be trusted on their own for high-stakes decisions. AI detection software doesn’t evaluate creativity, intent or originality. It looks for patterns. And patterns exist in every piece of text, human or AI-generated.

- Simple writing? Pattern.

- Repetitive phrasing? Pattern.

- Clear structure? Pattern.

That doesn’t automatically mean the content was AI-generated. It just means it resembles the way AI tends to write. That’s why AI detectors should never be treated as absolute proof, especially in education, hiring or legal settings. No tool in our test was sophisticated enough to understand why content was written a certain way; they just flagged what “looked like” AI.

But here’s the flip side.

When a detector flags 40%, 50% or 80% of your content as AI, that’s not just noise. That’s a signal. It means your content probably wasn’t just following patterns; it was likely generated (or heavily assisted) by an AI model. And even if no AI model was involved, it means the content reads as highly robotic.

- If your score is sitting in that 10-20% range? You can argue about nuance, editing, writing style or overly simple phrasing. That happens with human writing.

- But when you’re looking at 40%+ flagged as AI? It’s not just a coincidence.
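The rule of thumb above can be written down as a small triage helper. This is only a sketch of how we read scores: the 20% and 40% cutoffs come from the ranges mentioned above, while the treatment of everything in between (and the wording of the labels) is our assumption, since the article does not define that band.

```python
def triage(ai_percent: float) -> str:
    """Map a detector's AI percentage to a rough editorial action.

    Cutoffs follow the rule of thumb in this article: up to about 20% is
    arguable, 40% and above is a real signal. The 20-40% band is not
    defined in the article, so "unclear" is an assumption.
    """
    if not 0 <= ai_percent <= 100:
        raise ValueError("ai_percent must be between 0 and 100")
    if ai_percent >= 40:
        return "strong signal"  # likely AI-generated or robotic-sounding
    if ai_percent <= 20:
        return "arguable"  # could be style, editing or simple phrasing
    return "unclear"  # assumed middle band: worth a manual read


for score in (15, 30, 42.7):
    print(score, triage(score))
```

Treat the output as a prompt for a human review, not as a verdict: the same caveats about false positives on simple human writing still apply.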

Why do you need to remove AI patterns in content?

You need to remove AI patterns in your content to maintain quality. Removing AI patterns isn’t about avoiding detection — it’s about raising the bar. Content that sounds AI-written often fails before it’s flagged. It doesn’t build trust. It doesn’t convert. And it won’t rank.

If a detector flags your content, it usually means your content is:

- Repetitive

- Lacking originality

- Robotic

- Predictable

And if your content feels robotic, your audience will bounce, regardless of whether it was created by humans or bots. Google will notice, too. Search engines are getting smarter at rewarding real depth and penalizing formulaic content.

Note: Google doesn’t penalize AI-generated content, but it rewards engaging content.

AI patterns in your text signal:

- To readers: The content feels hollow, like it was written to check a box, not to inform or connect.

- To search engines: The page doesn’t add anything new, and could be low-quality.

- To editors or clients: You didn’t put in the effort. It reads like filler.

The fix: Replace surface-level statements with original thinking. Use real examples. Write how you speak. Say something worth reading.

The flipside: Generative AI tools make content creation faster. If you want to use AI without ending up with content that’s too “basic,” learn how to avoid AI writing patterns.

How easy is it to fool AI content detectors?

It’s pretty easy to fool AI content detectors with light edits. In our test, human-edited AI content passed several detectors. Just changing structure, rephrasing sentences or adding a more natural tone lowered the AI score.

Most tools are still not sophisticated enough to detect well-edited AI. They focus on patterns, not intent. If your edits break those patterns, the content often passes.

That’s why detection tools should never be the only check. A polished AI draft can look human enough to slip through.

Create AI-proof high-ranking content with Productive Shop

AI detection tools aren’t perfect. They won’t catch creativity. They won’t understand context. But they’re good enough to catch pattern-heavy, repetitive or AI-assisted content — and that’s exactly what they’re built for.

Specialized audiences like B2B SaaS buyers can easily tell when content feels robotic, generic or shallow. Content that sounds too AI-driven fails to build trust and credibility. Search engines reward content that’s original, authoritative and genuinely useful, not copy-pasted AI fluff or SEO-stuffed filler.

At Productive Shop, we help technology companies write B2B content that never reads like it came from a machine. Everything we write is designed to perform: original, sharp and built to rank.

Ready to create AI-proof content your audience actually trusts?

Frequently asked questions

How do AI detection tools work?

AI detectors work by detecting patterns in your writing. They use machine learning models to measure things like:

- Perplexity: Predictability of your words and phrases

- Burstiness: Sentence length and structure variation

- Repetition: Number of times phrases and sentence structures repeat

- Fluency: Estimate of how polished or “machine-like” the writing sounds

Most tools compare your text against known patterns from large language models like GPT-3 or GPT-4. They don’t read for meaning or originality. They score based on how much your writing looks like what AI usually produces.
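As a rough illustration of the repetition signal in the list above, the sketch below measures what share of a text’s three-word sequences occur more than once. The function name and the choice of three-word windows are our assumptions for illustration; commercial detectors do not publish how (or whether) they compute repetition this way, and this version ignores punctuation handling entirely.

```python
from collections import Counter


def repeated_ngram_share(text: str, n: int = 3) -> float:
    """Share of n-word sequences in `text` that occur more than once (0.0 to 1.0)."""
    words = text.lower().split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence of any n-gram that appears at least twice.
    repeated = sum(count for count in counts.values() if count > 1)
    return repeated / len(ngrams)


formulaic = "the tool is fast the tool is cheap the tool is simple"
varied = "every sentence here uses different words"
print(repeated_ngram_share(formulaic), repeated_ngram_share(varied))
```

A higher share means more recycled phrasing, which is the kind of surface pattern that simple human writing and AI drafts can both exhibit.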

Are AI detection tools accurate?

No, AI detection tools aren’t always accurate. They are good at spotting patterns but not context. They don’t understand meaning or creativity. In practice, they reliably catch only fully AI-generated content and weakly edited drafts, while sometimes flagging simple human writing and missing well-written AI text. Use the score as a signal, not as final proof.

What is the most accurate AI checker?

Quillbot was the most accurate AI checker in our experiment. It correctly identified fully AI-generated content and human-edited AI content, and it didn’t overflag simple human writing. This tool strikes the right balance: sensitive enough to catch AI patterns, but smart enough to trust human nuance.

Did any AI text detection tools flag human writing incorrectly?

Yes. Most of the tools flagged the basic human-written content as AI-generated.

This tells us: AI detectors aren’t judging creativity or intent — they’re judging patterns. And “too simple” often gets mistaken for “too robotic.” However, expert-level human writing — with nuance, depth and original phrasing — consistently passed through almost every AI text detection software.

Did human-edited, AI-generated content fool any AI detectors?

Human editing partially fooled the AI detectors.

Human editing (changing structure, improving phrasing, adding a more natural tone) lowered the AI score across most tools. But very few tools were fully convinced that the text was human after editing.

For example, Quillbot and Smodin were the most forgiving, scoring human-edited AI content around 35–54% AI. Other tools like Originality.ai, Copyleaks, Winston and Hive still scored the same content 99–100% AI, despite clear improvements.

The takeaway? Light edits can reduce AI detection scores, but don’t expect editing alone to “erase” AI patterns entirely. Detectors are still pretty good at spotting underlying structure or style fingerprints from language models.

Were there inconsistencies among AI text detectors?

Absolutely! There were inconsistencies among different detectors, especially on content that wasn’t black-and-white.

Tools like GPTZero and Quillbot showed more restraint on human writing and human-edited AI content. On the other hand, Originality.ai, Copyleaks and Winston went all-in on flagging anything that even smelled like AI, including simple human text.

Some tools (like Grammarly’s AI Detector) even struggled with consistency inside the same test, flagging basic human content as 100% AI, but letting lightly edited AI content pass as fully human.

What these findings mean: Accuracy isn’t just about catching AI; it’s about knowing when not to flag content. That’s where most tools failed.
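One practical way to act on that inconsistency is to treat disagreement itself as a signal. The sketch below is a hypothetical triage helper, not part of our test setup: the `triage` function, the `max_spread` and `flag_at` cutoffs, and the example tool names are all assumptions you would tune for your own workflow.

```python
from __future__ import annotations

from statistics import median


def triage(scores: dict[str, float],
           max_spread: float = 30.0,
           flag_at: float = 80.0) -> str:
    """Decide what to do with one document given AI scores (0-100) per tool.

    max_spread and flag_at are illustrative cutoffs, not published values.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    values = list(scores.values())
    spread = max(values) - min(values)
    if spread > max_spread:
        # Tools disagree strongly, so no single score should be trusted.
        return "human review: detectors disagree"
    if median(values) >= flag_at:
        # Tools agree the text looks AI-like; still a signal, not proof.
        return "human review: likely AI-assisted"
    return "no flag"


if __name__ == "__main__":
    # Hypothetical scores for a single draft, not results from our experiment.
    print(triage({"tool_a": 40.0, "tool_b": 99.0, "tool_c": 100.0}))
```

Either way, a flagged result routes the draft to a person. The detectors narrow down what to look at; they don’t make the final call.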

Why do AI content detection tools give different results?

AI content detection tools give different results because the detection isn’t standardized. Every tool has its own definition of what AI-generated content “looks like” — and that’s exactly why results vary so much.

Most AI text detection tools aren’t actually detecting “AI” in the way people think. AI detectors don’t read for meaning or creativity. They scan for patterns.

Some tools are trained mostly on content from specific models like GPT-3 or GPT-4. Others use stricter language models, scoring systems or thresholds to decide what “looks like” AI-generated text. That’s why you’ll see huge differences between tools:

- Some flag simple human writing as AI because it’s clean or repetitive.

- Others pass lightly edited AI content because the structure looks human enough.

- Some tools, such as Originality.ai and Scribbr, are tuned for educational use (more sensitive), while others, including Smodin and Hive, are built for content marketing (more forgiving).
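Thresholds are only one of the reasons listed above, but they are the easiest to illustrate. In the short sketch below, the same underlying score produces opposite verdicts under two cutoffs. The profile names and threshold values are invented for illustration and do not describe any real tool’s settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectorProfile:
    name: str
    threshold: float  # AI-likelihood score (0-100) at or above which text is flagged


def verdict(score: float, profile: DetectorProfile) -> str:
    return "flagged as AI" if score >= profile.threshold else "passed as human"


# Hypothetical profiles: one tuned to be sensitive, one tuned to be forgiving.
PROFILES = [
    DetectorProfile("strict_tool", threshold=30.0),
    DetectorProfile("lenient_tool", threshold=70.0),
]


def compare(score: float) -> dict:
    """Return each hypothetical profile's verdict for the same score."""
    return {p.name: verdict(score, p) for p in PROFILES}


if __name__ == "__main__":
    for name, result in compare(50.0).items():
        print(f"{name}: {result}")
```

In practice the scores themselves also differ between tools, since each one is trained on different data. The cutoff is one more layer of variation on top of that.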

Do I need a paid AI text detection tool?

Not always. Free tools like Quillbot and GPTZero are enough for quick checks. If you’re reviewing content in bulk and frequently run out of free checks, a paid tool might help. If you do pay, pay for convenience, not for an advertised “better model.”

Can I trust paid tools for AI-generated text detection?

No, paid AI detection tools aren’t always more accurate. You’re mostly paying for extra features, not guaranteed results. Some tools, like Originality.ai, are strict and can overflag human content. Others, like Grammarly, are too relaxed and miss obvious AI. Use paid tools if you need more control or advanced options, but always review the results yourself.

How accurate is Grammarly AI detection?

Grammarly’s AI detection is inconsistent at best. It’s fine for a quick check, but it’s not reliable enough for high-stakes review or content publishing decisions. It’s better as a secondary tool alongside a more robust detector.

In our test, it scored basic human writing as 100% AI-generated but passed human-edited AI content as fully human. It also caught only 66% of fully AI-generated content.

That tells us Grammarly’s AI detector is heavily pattern-based but lacks nuance. It tends to overflag simple, straightforward writing as AI while missing lightly edited AI content that sounds more natural.

How accurate is Scribbr AI Detector?

Scribbr’s AI detector is reasonably accurate for everyday content screening. It’s one of the few tools that doesn’t overflag everything. But like most AI text detectors, it’s not perfect for high-stakes academic integrity decisions without human review.

Scribbr’s AI detector is powered by Quillbot. When you use it, you’re getting Quillbot’s AI detection technology in Scribbr’s interface.