Agentic AI is an artificial intelligence system designed to work toward specific goals on its own, combining reasoning, planning and action. It’s not just automation with a fresh coat of paint. It’s technology that can adapt to changing conditions and coordinate work across tools.

At a time when Gartner predicts that 30% of Fortune 500 companies will deliver service through a single AI-enabled channel by 2028, the stakes for understanding this technology have never been higher.

So, this guide breaks it all down for you. You’ll learn:

- What is agentic AI?

- How AI agents work

- What makes agentic AI systems different from assistants and scripts

Key highlights:

- An AI agent is a software system that acts autonomously toward goals. Unlike an AI chatbot or assistant, it doesn’t wait for prompts.

- Agentic AI systems use core components like memory, reasoning models, decision logic and feedback loops to complete multi-step tasks.

- Agentic AI solutions are already being used across SaaS: from lead routing and CRM updates to campaign optimization and internal ops workflows.

- Agentic AI requires strong oversight, clear boundaries and the right tech stack. But when designed and used properly — like the systems built by Productive Shop — it can significantly reduce manual workload.

Agentic AI definition: What exactly is an agentic AI system?

An agentic AI system is software that can autonomously perceive its environment, make decisions and act toward a defined goal without step-by-step instructions from a human.

In simpler terms, an artificial intelligence (AI) agent doesn’t just respond to commands — it figures out what to do next based on context, data and its objective.

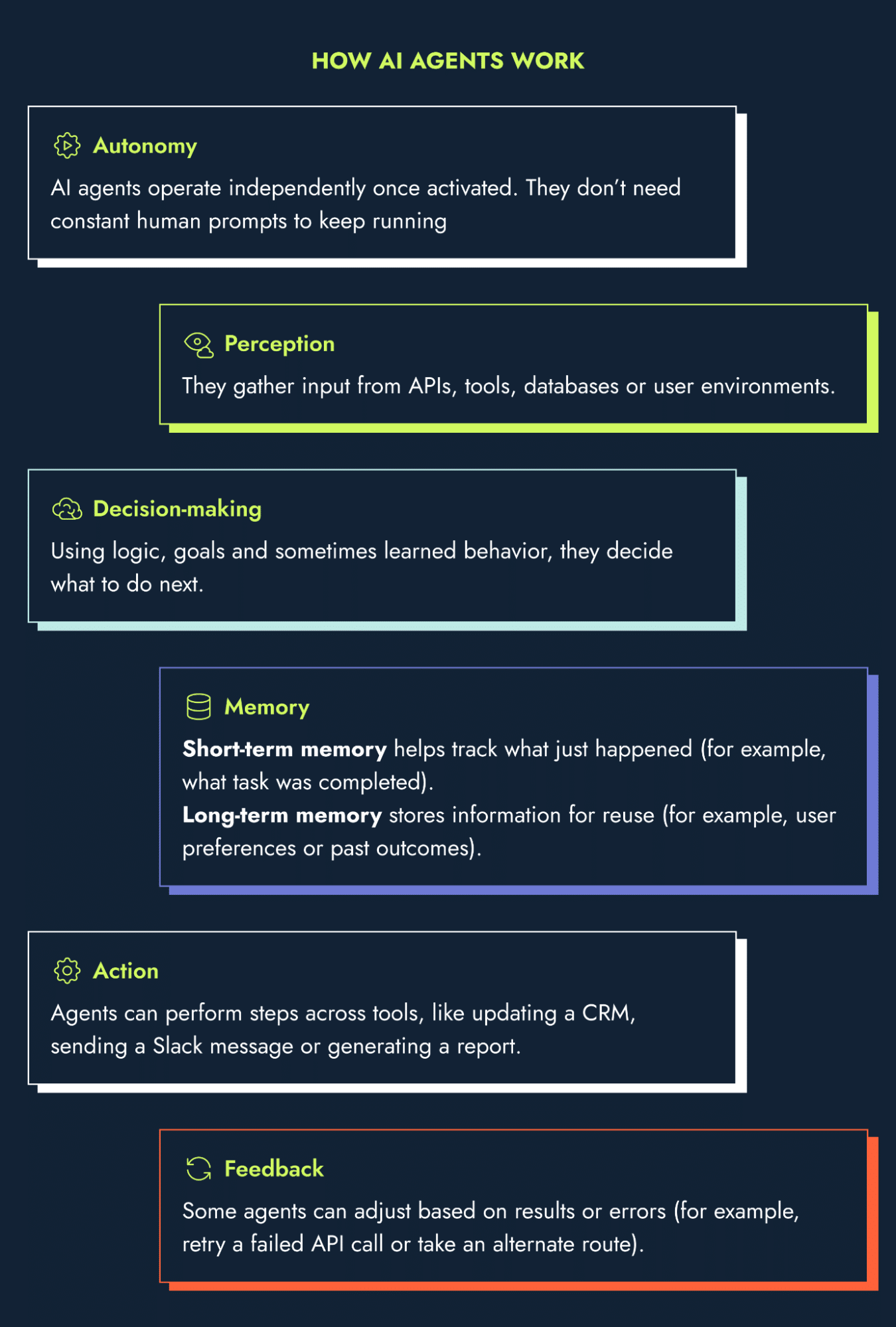

How do AI agents work? Core components

AI agents work through a cycle of perception, decision-making and action — all tied to a specific goal. Unlike basic automation or prompt-driven tools, agents aren’t limited to waiting for input. Depending on how the system is designed, they can observe their environment, process data, make decisions and execute tasks on their own.

Here are the core components of AI agents that make this possible:

- Autonomy: AI agents operate independently once activated. They don’t need constant human prompts to keep running.

- Perception: They gather input from APIs, tools, databases or user environments.

- Decision-making: Using logic, goals and sometimes learned behavior, they decide what to do next.

- Memory: There are two types of memory in an AI agent:

  - Short-term memory helps track what just happened (for example, what task was completed).

  - Long-term memory stores information for reuse (for example, user preferences or past outcomes).

- Action/execution: Agents can perform steps across tools, like updating a CRM, sending a Slack message or generating a report.

- Feedback loop: Some agents can adjust based on results or errors (for example, retry a failed API call or take an alternate route).
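
To make the cycle concrete, here’s a minimal Python sketch of an agent loop. It’s illustrative, not how any particular framework works: the `Agent` class, its `perceive`/`decide`/`act` methods and the flaky API are hypothetical stand-ins, and a plain dict plays the role of the environment a real agent would reach through APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    goal: int  # hypothetical goal: number of records to sync
    short_term: list = field(default_factory=list)  # what happened in this run
    long_term: dict = field(default_factory=dict)   # persists across runs

    def perceive(self, env):
        # Perception: read current state (a dict stands in for an API or database)
        return {"pending": list(env["pending"])}

    def decide(self, obs):
        # Decision-making: stop when the goal is met or nothing is left to do
        if len(self.short_term) >= self.goal or not obs["pending"]:
            return None
        return ("sync", obs["pending"][0])

    def act(self, action, env, max_retries=2):
        # Action plus feedback loop: retry a failed call before taking another route
        _, record = action
        env["pending"].remove(record)
        for _attempt in range(max_retries + 1):
            if env["api"](record):
                self.short_term.append(record)
                return True
        # Alternate route: remember the failure so a human can follow up
        self.long_term.setdefault("failed", []).append(record)
        return False

    def run(self, env):
        while (action := self.decide(self.perceive(env))) is not None:
            self.act(action, env)
        return self.short_term


calls = {}


def flaky_api(record):
    # Hypothetical API that fails the first call for each record, then succeeds
    calls[record] = calls.get(record, 0) + 1
    return calls[record] > 1


agent = Agent(goal=2)
print(agent.run({"pending": ["a", "b", "c"], "api": flaky_api}))  # ['a', 'b']
```

Each failed call is retried, the agent stops once its goal is met, and records that never succeed are parked in long-term memory instead of stalling the run.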

Key features of agentic AI solutions (and what makes them different)

Agentic AI refers to the broader class of AI systems that act with purpose. Here’s what makes agentic AI solutions different:

- Continuous operation: AI agents don’t wait for input. They can run on a schedule, respond to triggers or stay active in the background.

- Autonomous decision-making: Agents can evaluate conditions and decide what to do next without human input.

- Memory: They remember past actions, user inputs and outcomes, which helps them make better decisions over time.

- Goal-seeking behavior: Agents aren’t just reactive — they’re outcome-oriented. They’ll try different steps to achieve a target.

- Self-improvement (in some cases): With access to feedback or performance data, agents can adjust workflows or actions to improve results.

Agentic AI models

Agentic AI models are what power an agent’s ability to reason, plan and take purposeful action. While the core components (like memory and decision-making) define what an agent does, the underlying models determine how it thinks through problems and chooses what to do next.

At the heart of these systems are reasoning models: large language models (LLMs) built or tuned for multi-step logic, planning and goal decomposition. These models give AI agents the ability to:

- Break tasks into smaller, manageable steps

- Sequence actions in a logical order

- Adjust plans based on feedback or changing inputs
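
Here’s a small Python sketch of sequencing plus a simple form of adjustment. It assumes the model has already broken a goal into steps with dependencies (the step names are hypothetical). Python’s standard `graphlib.TopologicalSorter` orders the steps, and the executor adjusts by skipping any step whose prerequisites failed. A real agent might instead ask the model to produce a new plan.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical plan a reasoning model might return for "publish the monthly SEO report":
# each step maps to the set of steps it depends on.
plan = {
    "pull_rankings": set(),
    "pull_traffic": set(),
    "analyze": {"pull_rankings", "pull_traffic"},
    "write_summary": {"analyze"},
    "post_to_slack": {"write_summary"},
}


def sequence(plan):
    # Sequence actions so every prerequisite runs before the step that needs it
    return list(TopologicalSorter(plan).static_order())


def execute(plan, run_step):
    # Run steps in order; when one fails, skip everything downstream of it
    completed, blocked = [], set()
    for step in sequence(plan):
        if plan[step] & blocked:
            blocked.add(step)  # a prerequisite failed, so this step can't run
            continue
        if run_step(step):
            completed.append(step)
        else:
            blocked.add(step)
    return completed, blocked
```

Independent steps, like the two data pulls, can come out in either order. Only the dependency constraints are guaranteed.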

Examples of reasoning models used in agentic AI systems include:

- OpenAI o3: A reasoning model built to help agents plan and execute multi-step tasks with logical sequencing.

- DeepSeek R1: Known for chain-of-thought reasoning, this reasoning model lets agents break down complex goals into actionable pieces.

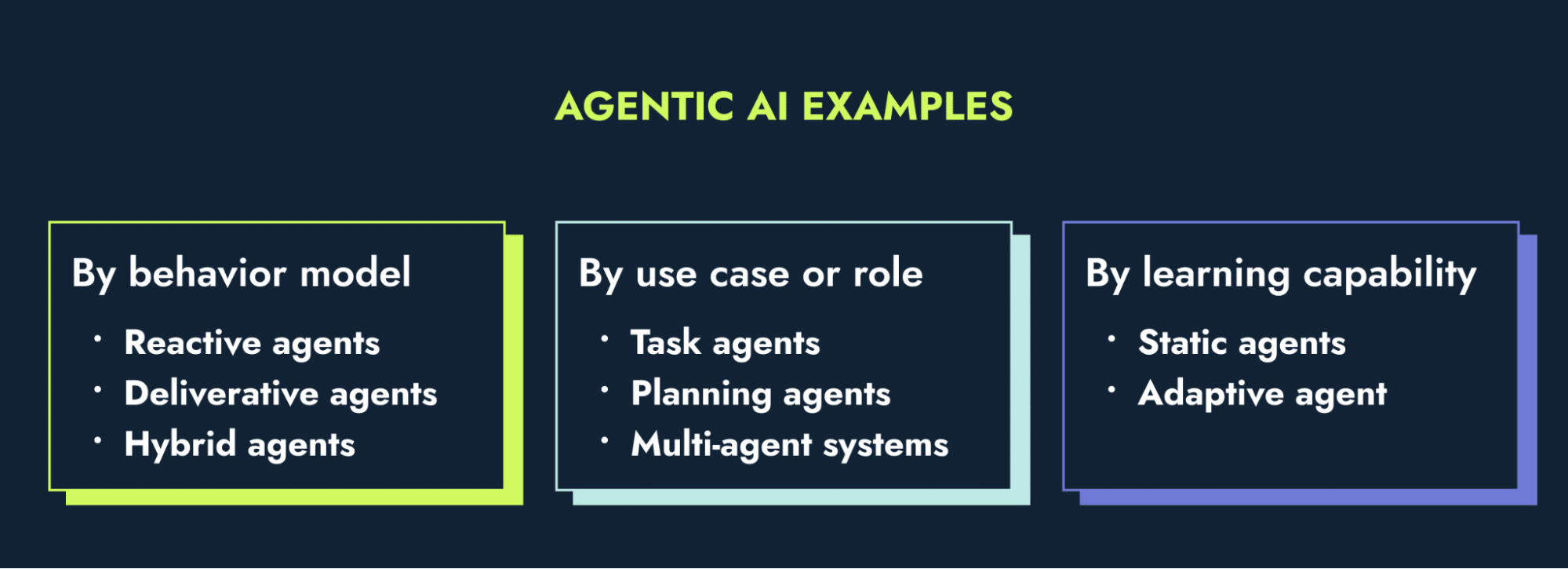

Types and examples of agentic AI systems

There are different types of agentic AI systems, depending on the goal, architecture and environment.

Use this information to identify the right approach for your use cases:

AI agent types by behavior model

By behavior model, AI agents fall into three types:

- Reactive agents: Fast, direct responses to inputs without using memory or learning

  - Example: A bot that reroutes tickets based on keywords

- Deliberative agents: Decisions shaped by context, planning and anticipation of outcomes

  - Example: An agent that schedules meetings based on availability and past user preferences

- Hybrid agents: A mix of reactive speed and deliberative planning, suited for complex enterprise or real-time environments

  - Example: A lead routing agent that instantly assigns leads based on criteria but also revisits decisions if engagement changes
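
The difference between reactive and hybrid behavior is easier to see in code. In this Python sketch, the keywords, queue names, regions and engagement threshold of 50 are all hypothetical. The reactive router maps keywords straight to a queue. The hybrid router assigns a lead instantly and keeps a record of that decision so it can revisit it when engagement changes.

```python
# Reactive: keyword rules, no memory of past tickets (keywords and queues are hypothetical)
ROUTES = {"refund": "billing", "invoice": "billing", "bug": "engineering", "password": "support"}


def reactive_route(ticket_text):
    text = ticket_text.lower()
    for keyword, queue in ROUTES.items():
        if keyword in text:
            return queue  # first matching keyword wins
    return "general"


class HybridLeadRouter:
    """Assigns leads instantly (reactive), then revisits past decisions (deliberative)."""

    def __init__(self, sales_threshold=50):
        self.sales_threshold = sales_threshold
        self.assignments = {}  # memory: lead_id -> (region, team)

    def _team_for(self, region, engagement):
        tier = "sales" if engagement >= self.sales_threshold else "nurture"
        return f"{region}-{tier}"

    def assign(self, lead_id, region, engagement):
        team = self._team_for(region, engagement)
        self.assignments[lead_id] = (region, team)
        return team

    def revisit(self, lead_id, new_engagement):
        # Re-evaluate a stored decision; return the new team only if it changed
        region, old_team = self.assignments[lead_id]
        new_team = self._team_for(region, new_engagement)
        if new_team == old_team:
            return None
        self.assignments[lead_id] = (region, new_team)
        return new_team
```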

AI agent types by use case or role

By use case or role, AI agents fall into three types:

- Task agents: Repeatedly execute a specific function

  - Example: Data cleanup or SEO report generation

- Planning agents: Focus on multi-step or long-term outcomes

  - Example: Orchestrating a SaaS product launch

- Multi-agent systems: Networks of agents that work together to complete a larger goal, also called an AI agent workflow

  - Example: Building an investor list, where one agent pulls data, another cleans it and a third sends a summary
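
A multi-agent workflow can be as simple as passing one agent’s output to the next. This Python sketch mirrors the investor-list example with three hypothetical agents written as plain functions. The sample records are made up. In practice, each agent might wrap an API call, a database query or an LLM.

```python
def pull_agent():
    # Stand-in for pulling investor records from a data source (sample data is made up)
    return [
        {"name": "Acme Ventures", "email": "Deals@Acme.vc ", "stage": "seed"},
        {"name": "acme ventures", "email": "deals@acme.vc", "stage": "seed"},
        {"name": "Beta Capital", "email": "", "stage": "series-a"},
        {"name": "Gamma Partners", "email": "hi@gamma.com", "stage": "series-a"},
    ]


def clean_agent(records):
    # Normalize emails, drop rows without contact info and remove duplicates
    seen, cleaned = set(), []
    for rec in records:
        email = rec["email"].strip().lower()
        if not email or email in seen:
            continue
        seen.add(email)
        cleaned.append({**rec, "email": email})
    return cleaned


def summary_agent(records):
    # Count investors per stage and produce a one-line summary
    by_stage = {}
    for rec in records:
        by_stage[rec["stage"]] = by_stage.get(rec["stage"], 0) + 1
    parts = ", ".join(f"{n} {stage}" for stage, n in sorted(by_stage.items()))
    return f"{len(records)} investors ready: {parts}"


def run_workflow():
    # Each agent's output becomes the next agent's input
    return summary_agent(clean_agent(pull_agent()))


print(run_workflow())  # 2 investors ready: 1 seed, 1 series-a
```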

AI agent types by learning capability

There are two types of AI agents based on their learning capabilities:

- Static agents: Rules are fixed, no learning or adjustment without manual input

- Adaptive agents: Feedback-driven behavior that adjusts over time and can be rule-based or learning-enabled
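
Here’s a rough Python illustration of the difference, using a hypothetical ticket-escalation rule. The static agent’s 24-hour limit never changes. The adaptive agent is rule-based but adjusts its limit from feedback (here, whether a customer churned). The step size and 4-hour floor are arbitrary choices for the example.

```python
class StaticAgent:
    """Fixed rule: escalate tickets that wait longer than 24 hours."""

    limit_hours = 24.0

    def should_escalate(self, wait_hours):
        return wait_hours > self.limit_hours


class AdaptiveAgent(StaticAgent):
    """Same rule, but the limit moves based on feedback about outcomes."""

    def __init__(self, limit_hours=24.0, step=2.0, floor=4.0):
        self.limit_hours = limit_hours
        self.step = step
        self.floor = floor

    def feedback(self, customer_churned):
        if customer_churned:
            # Escalation likely came too late, so tighten the limit (but not below the floor)
            self.limit_hours = max(self.floor, self.limit_hours - self.step)
        else:
            # Things went fine, so relax the limit slowly
            self.limit_hours += self.step / 4
```

A learning-enabled agent would replace the hand-written `feedback` rule with a model trained on outcomes. The interface would stay the same.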

AI agent vs AI assistant vs AI chatbot

The terms AI agent, AI assistant and AI chatbot often get used interchangeably, but they’re not the same. The differences matter when you’re deciding what kind of AI to implement in your workflow. Here’s how the three compare:

| Feature | AI agent | AI assistant | AI chatbot |
|---|---|---|---|
| Definition | Autonomous decision-maker | Helpful interface layer | Rule-based responder |
| How it works | Acts toward goals, makes decisions and executes multi-step tasks | Responds to prompts using natural language processing (NLP) | Follows scripts or keyword triggers |
| Limitations | More complex to set up and monitor | Single-turn, prompt-dependent, not goal-seeking | Can’t adapt, can’t act on its own |
| Autonomy | High: can operate without prompting | Low: responds to input | None: fully reactive |
| Memory | Retains and applies short- and long-term context | Limited, often single-turn | None (stateless) |
| Initiative | Can trigger actions independently | Doesn’t initiate, only responds | Can’t initiate actions |
| Complexity | High: requires reasoning models and orchestration | Moderate: built on LLMs | Low: simple to build and deploy |
| Learning/adaptation | Often includes a feedback loop | Minimal | None |
| Example use case | Understands your ICP, routes qualified leads to sales and sends a summary to the CRM | Answers simple prompts like “What’s on my calendar?” | SEO content gap analysis or idea generator bot |

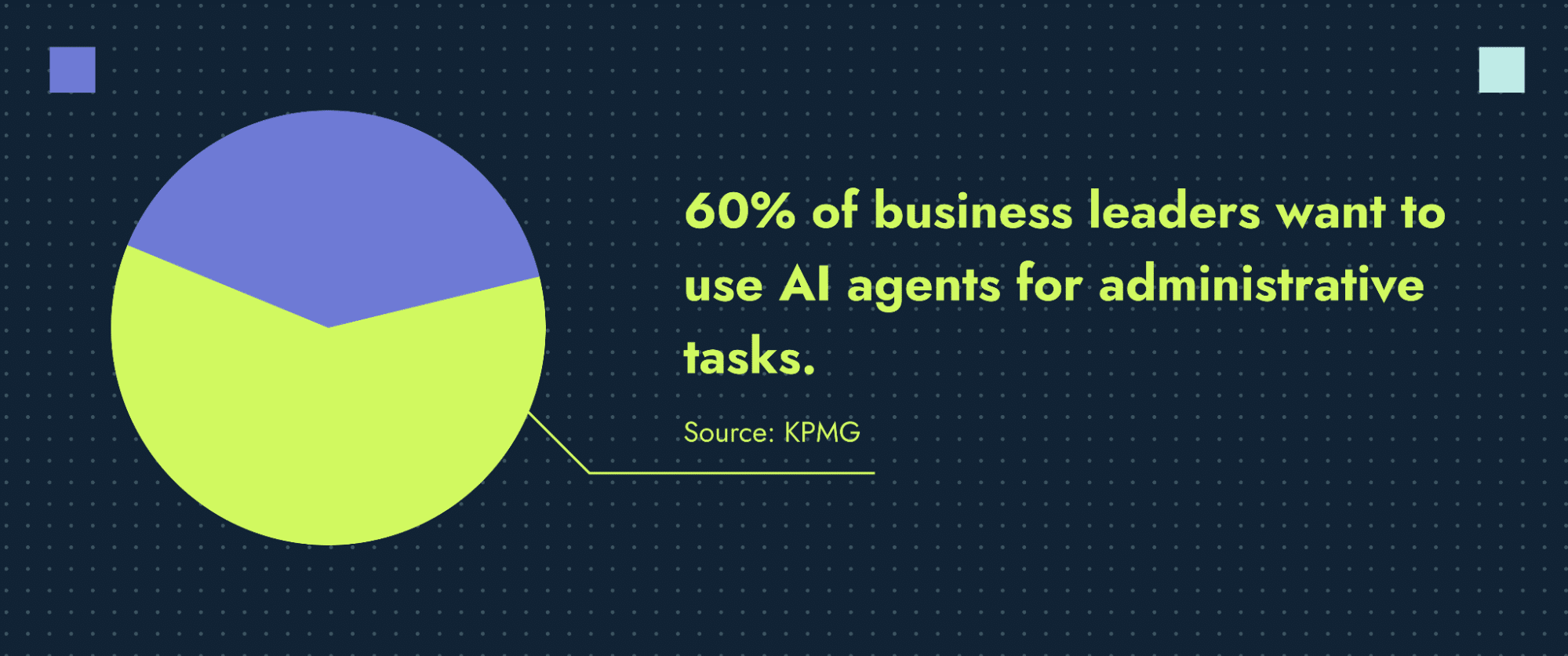

Common use cases for AI agents in SaaS

AI agents are especially valuable in SaaS environments, where repetitive, rule-based and cross-functional tasks are everywhere. They help teams move faster, reduce manual load and maintain accuracy across systems without constant supervision. According to a KPMG report, 60% of business leaders want to use AI agents for administrative tasks.

Different teams can use AI agents in multiple ways:

Use cases of AI agents for marketing teams

An AI agent can help with many marketing challenges, including:

- Campaign optimization: Monitor campaign performance against your marketing KPIs and adjust budgets or targeting using real-time data.

- Lead scoring: Analyze user behavior across channels and assign lead scores automatically.

- AI content writing and distribution: Schedule and personalize content pushes across channels based on user segments.
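
As a sketch of how an agent might score leads from cross-channel behavior, here’s a simple weighted model in Python. The event names, weights, multi-channel bonus and grade cutoffs are hypothetical. A production agent would tune or learn them from your own conversion data.

```python
# Hypothetical weights per behavior; tune these against real conversion data
WEIGHTS = {"pricing_page_view": 20, "demo_request": 40, "email_open": 5, "webinar_attended": 15}


def score_lead(events, cap=100):
    # events: list of (event_type, channel) pairs collected across channels
    score = sum(WEIGHTS.get(event, 0) for event, _channel in events)
    channels = {channel for _event, channel in events}
    if len(channels) >= 3:
        score += 10  # bonus for engaging on three or more channels
    return min(score, cap)


def grade(score):
    if score >= 60:
        return "hot"
    if score >= 30:
        return "warm"
    return "cold"
```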


Use cases of AI agents for sales teams

Sales teams can use an AI agent for:

- CRM hygiene: Identify and fix duplicate or outdated records.

- Lead routing: Automatically assign leads based on rules like region, industry or engagement level.

- Outreach sequencing: Trigger personalized sales funnel sequences based on user behavior.
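
For CRM hygiene, the core logic can be small. This Python sketch assumes each record has hypothetical `email` and `updated` fields. It keeps the most recently updated record per normalized email and flags records untouched for more than a year. An agent would typically propose merges or archiving for review rather than silently deleting data.

```python
from datetime import date


def dedupe(records):
    # Keep the most recently updated record for each normalized email address
    latest = {}
    for rec in records:
        key = rec["email"].strip().lower()
        if key not in latest or rec["updated"] > latest[key]["updated"]:
            latest[key] = rec
    return list(latest.values())


def find_stale(records, today, max_age_days=365):
    # Flag records that haven't been updated within the allowed window
    return [rec for rec in records if (today - rec["updated"]).days > max_age_days]


records = [
    {"id": 1, "email": "Ana@Example.com", "updated": date(2024, 1, 10)},
    {"id": 2, "email": "ana@example.com ", "updated": date(2024, 6, 2)},
    {"id": 3, "email": "bo@example.com", "updated": date(2022, 3, 1)},
]
clean = dedupe(records)
print([r["id"] for r in clean])                                # [2, 3]
print([r["id"] for r in find_stale(clean, date(2024, 7, 1))])  # [3]
```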

Use cases of AI agents for finance and operations teams

For finance and operations teams, agentic AI can help with:

- Billing and finance automation: Reconcile invoices, flag anomalies and send payment reminders.

- Customer support automation: Escalate tickets based on sentiment or SLA status. Route requests across customer service teams.

- Internal workflows: Automate onboarding, asset provisioning or compliance checklists.
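
Invoice reconciliation is a good example of a structured, rule-driven task. This Python sketch assumes invoices and payments arrive as hypothetical dicts keyed by invoice ID. It uses `Decimal` to avoid floating-point rounding on money amounts. The one-cent tolerance is an arbitrary choice.

```python
from decimal import Decimal


def reconcile(invoices, payments, tolerance=Decimal("0.01")):
    # invoices and payments map invoice IDs to Decimal amounts
    result = {"paid": [], "unpaid": [], "mismatched": [], "orphan_payments": []}
    for inv_id, amount in invoices.items():
        if inv_id not in payments:
            result["unpaid"].append(inv_id)  # candidate for a payment reminder
        elif abs(payments[inv_id] - amount) > tolerance:
            result["mismatched"].append(inv_id)  # anomaly: flag for a human
        else:
            result["paid"].append(inv_id)
    # Payments that don't match any invoice are anomalies too
    result["orphan_payments"] = [p for p in payments if p not in invoices]
    return result
```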

What is the pattern behind the most effective AI agent use cases?

The most effective use cases:

- Involve structured, multi-step tasks

- Are high-volume and high-impact

- Require coordination across tools (for example, CRM, Slack, Google Docs, billing platform)

According to one market report, the AI agent market is projected to reach $52.2 billion by 2030, so expect these use cases to keep growing as the models advance.

How AI agents connect to your tech stack

Most AI agents integrate with your existing tech stack through:

- APIs: To read, write or modify data across tools like CRMs, analytics platforms or internal databases.

- Webhooks and triggers: To kick off actions when certain events happen, like a lead entering a new stage or a file being updated.

- Robotic process automation (RPA): In some cases, agents simulate user actions in tools that lack good APIs.

- Multi-tool orchestration: Agents string together actions across systems — for example, completing an SEO content audit by pulling data from Semrush, analyzing it and then sending a summary to Slack.
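
Under the hood, trigger-based agents often come down to mapping event types to handlers. This Python sketch parses a webhook payload and dispatches it to a handler. The event names, payload shape and returned action strings are hypothetical placeholders for real API calls. A production endpoint would also verify the sender’s signature before trusting the payload.

```python
import json

HANDLERS = {}  # event type -> handler function


def on(event_type):
    # Decorator that registers a handler for one webhook event type
    def register(fn):
        HANDLERS[event_type] = fn
        return fn
    return register


@on("lead.stage_changed")
def handle_stage_change(data):
    # Multi-tool orchestration: update the CRM, then notify the sales channel
    return [
        f"crm.update lead={data['lead_id']} stage={data['stage']}",
        f"slack.post #sales lead {data['lead_id']} moved to {data['stage']}",
    ]


@on("file.updated")
def handle_file_updated(data):
    return [f"audit.recheck path={data['path']}"]


def handle_webhook(raw_body):
    # Parse the incoming payload and dispatch it to the matching handler
    event = json.loads(raw_body)
    handler = HANDLERS.get(event.get("type"))
    if handler is None:
        return []  # unknown event types are ignored here; a real agent might log them
    return handler(event.get("data", {}))
```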

Where humans fit in agentic AI applications

AI agents aren’t meant to run wild. Human oversight is essential, no matter how advanced the agent is. Here’s where people fit in agentic AI applications:

- Approval gates: Agents pause to request human approval before performing sensitive or irreversible actions (for example, sending emails, issuing refunds).

- Fallbacks for edge cases: When the agent encounters uncertainty, exceptions or ambiguous input, it escalates to a human.

- Monitoring and observability: Teams should track what agents are doing — via logs, dashboards or audit trails — to ensure accuracy and make improvements over time.

- Course correction and enrichment: Humans review and refine agent outputs, add critical context and make adjustments to ensure results meet business goals, brand standards and changing conditions.
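
Approval gates and fallbacks translate naturally into code. In this Python sketch, the set of sensitive actions, the 0.8 confidence cutoff and the `approve` callback are all hypothetical. The callback stands in for a human reviewer.

```python
from dataclasses import dataclass, field

# Hypothetical set of sensitive or irreversible actions that need sign-off
SENSITIVE_ACTIONS = {"send_email", "issue_refund"}


@dataclass
class Proposal:
    action: str
    confidence: float  # the agent's own confidence estimate, from 0 to 1
    payload: dict = field(default_factory=dict)


def gate(proposal, approve, min_confidence=0.8):
    # Fallback: uncertain proposals are escalated to a human instead of executed
    if proposal.confidence < min_confidence:
        return "escalated"
    # Approval gate: sensitive actions pause until a human approves them
    if proposal.action in SENSITIVE_ACTIONS and not approve(proposal):
        return "rejected"
    return "executed"
```

Routine actions pass straight through. The human is only pulled in for sensitive actions or when the agent is unsure.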

How to choose the best agentic AI platform

Depending on your complexity and security needs, different agentic AI platforms may make sense:

| AI agent tool | Best for | Example use cases |
|---|---|---|
| Zapier, Make, n8n | Quick setup, low-code workflows | Marketing automations, CRM updates |
| LangChain, AutoGen, CrewAI | LLM orchestration, planning, agent collaboration | Multi-agent reasoning, retrieval-based agents |
| Custom orchestrators (Productive Shop’s AI agent solution) | Enterprise-grade security and scalability | Internal tools connecting proprietary systems |

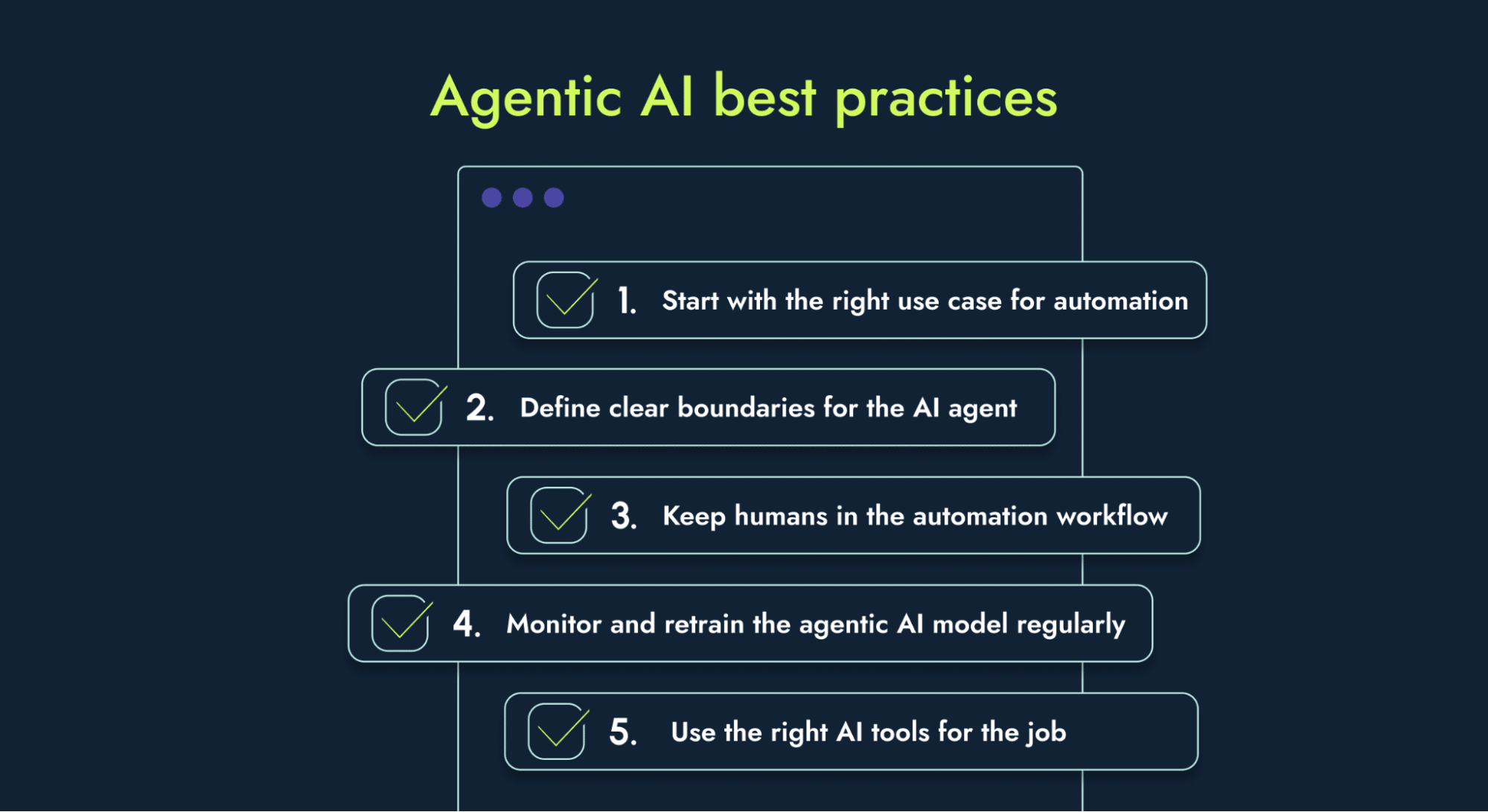

Best practices for using agentic AI tools

Building or adopting AI agents isn’t just about plugging them in. It’s also about using them wisely. Poorly scoped or unsupervised agents create more mess than value. Follow these best practices to keep your agentic AI tools effective and manageable.

1. Start with the right use case for automation

Don’t overcomplicate AI agents. Begin with:

- High-volume, repetitive tasks like technical SEO crawling

- Clear goals and outcomes

- Low risk of failure (for example, internal processes rather than customer-facing tasks)

2. Define clear boundaries for the AI agent

Agents need constraints. Make sure you:

- Set limits on what tools or data they can access

- Define specific triggers and conditions for action

- Log all activity for transparency
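
One way to enforce these boundaries is to route every tool call through a wrapper that checks an allowlist and logs the call. This Python sketch uses the standard `logging` module. The tool names and the `BoundedToolbox` class are hypothetical.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s %(message)s")
audit_log = logging.getLogger("agent.audit")


class BoundedToolbox:
    """Wraps an agent's tools so it can only call what it's allowed to."""

    def __init__(self, tools, allowed):
        self._tools = tools
        self._allowed = set(allowed)  # explicit allowlist of tool names

    def call(self, name, **kwargs):
        if name not in self._allowed:
            audit_log.warning("blocked %s %s", name, kwargs)
            raise PermissionError(f"tool {name!r} is outside this agent's scope")
        audit_log.info("called %s %s", name, kwargs)
        return self._tools[name](**kwargs)
```

Both allowed and blocked calls end up in the log, which gives you the activity trail the last bullet asks for.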

3. Keep humans in the automation workflow

Autonomy is useful, not absolute. Always:

- Require approval for sensitive or external-facing actions

- Have fallbacks or escalation paths for exceptions

- Review performance regularly

4. Monitor and retrain the agentic AI model regularly

Agents are not fire-and-forget. You should:

- Track output accuracy and execution quality

- Adjust prompts, rules or logic based on performance

- Retrain learning-enabled models, especially when tasks or data change over time

5. Use the right AI tools for the job

Choose your stack based on:

- The complexity of workflows

- Your team’s technical ability

- Security, observability and scalability needs

Are AI agents safe? Risks, bias and guardrails

Agentic AI systems come with power and risk. When you’re giving AI the ability to act without asking for permission every time, you need to think carefully about where things can go wrong and how to keep them in check.

What can go wrong with agentic AI?

- Hallucination: Agents using LLMs can fabricate facts or make decisions based on faulty assumptions. For example, an agent drafting content may cite statistics or sources that don’t exist.

- Bias reinforcement: If an agent is trained on biased data or uses discriminatory rules, it can amplify those issues at scale.

- Autonomy overreach: Agents without proper constraints can act outside their intended scope, leading to errors or even compliance violations.

- Lack of explainability: It can be hard to trace how an agent reached a decision — especially if it’s using multiple tools or reasoning steps.

How to secure AI agents and follow compliance

Agents can access sensitive systems. For the best agentic AI security, make sure you:

- Use secure authentication and restrict access to minimize the risk of unauthorized actions

- Enable detailed logging and observability to support audits

How to create accountability for agent deployment

Even if an AI agent “decides” something, the responsibility still falls on the humans who deploy and oversee it. Accountability best practices include:

- Clear ownership within your team

- Change logs and audit trails

- Manual overrides and kill switches
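
Kill switches and manual overrides don’t have to be elaborate. This Python sketch uses a `threading.Event` as a stop flag that an operator could set from another thread. A dict holds human-provided overrides. The `KillSwitch` and `run_tasks` names are hypothetical.

```python
import threading


class KillSwitch:
    def __init__(self):
        self._stopped = threading.Event()  # thread-safe flag an operator can set

    def trip(self):
        self._stopped.set()

    def active(self):
        return self._stopped.is_set()


def run_tasks(tasks, switch, overrides=None):
    overrides = overrides or {}  # manual overrides: task -> human-chosen result
    results = []
    for task in tasks:
        if switch.active():
            break  # stop immediately; remaining tasks are left untouched
        results.append(overrides.get(task, f"auto:{task}"))
    return results
```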

Explore AI-powered agentic workflows with Productive Shop

Agentic AI is the new way to scale execution, reduce manual work and build smarter systems that adapt. And according to Box’s State of AI report, 87% of companies have started using AI agents of some kind, while 41% are leveraging advanced, fully autonomous operations that deliver much higher productivity gains.

You don’t want to be left behind.

Our team helps tech companies design, deploy and scale AI-powered systems that work. From building agentic workflows and setting up orchestration layers to crafting the right prompts and guardrails, we make sure your AI agents are secure, reliable and ROI-driven.

Let’s talk about what AI agents can do for your business processes and how to make them work for you.

Frequently asked questions

Is ChatGPT considered an AI agent?

Not in its standard form. In regular chat use, ChatGPT is an AI assistant rather than an AI agent: it responds when prompted and doesn’t initiate actions, pursue goals or operate across tools without human input. It lacks autonomy, a core trait of agentic systems.

Do custom GPTs qualify as agentic AI systems?

No, custom GPTs don’t qualify as agentic AI systems on their own. Custom GPTs are still AI assistants unless they’re connected to external tools, given access to data and programmed to take multi-step actions independently. With orchestration, they can power agents, but they’re not agents by default.

Can AI agents think or feel? Are they sentient?

No, AI agents are not sentient. They don’t understand or feel anything. They simulate decision-making by processing inputs and applying rules or patterns learned from data. Any appearance of reasoning or emotion is pattern generation, not awareness.

Should I worry about AI agents replacing human jobs?

Not in most cases. AI agents are more likely to replace specific tasks than full jobs. They’re ideal for automating repetitive, structured work, like routing leads, updating records or sending follow-ups.

Roles that involve judgment, creativity or collaboration still rely heavily on people.


How do AI agents reflect or reinforce societal bias?

AI agents reinforce bias when built on biased data or rules. For example, if an agent is trained on biased hiring data or product feedback skewed by demographics, it can unintentionally replicate unfair patterns. That’s why diverse data, oversight and testing are critical.

When an AI agent makes a mistake, who’s responsible?

The humans who designed, deployed or approved an AI agent are responsible when it makes a mistake. AI agents are not legally or morally accountable. If something goes wrong, from biased output to a financial error, the responsibility falls on the team that built and monitored the system.

Where does human oversight fit into an agentic system?

Human oversight is required at every stage of the agentic system. From defining the AI agent’s scope to reviewing its outputs, people are responsible for setting limits, monitoring results and stepping in when needed. Agentic AI should never run without checks.